Cloudflare's workers-oauth-provider... (by Ken) is a really interesting experiment. To me, this is the first time I've seen production development driven entirely with AI and then open-sourced together with the prompts.

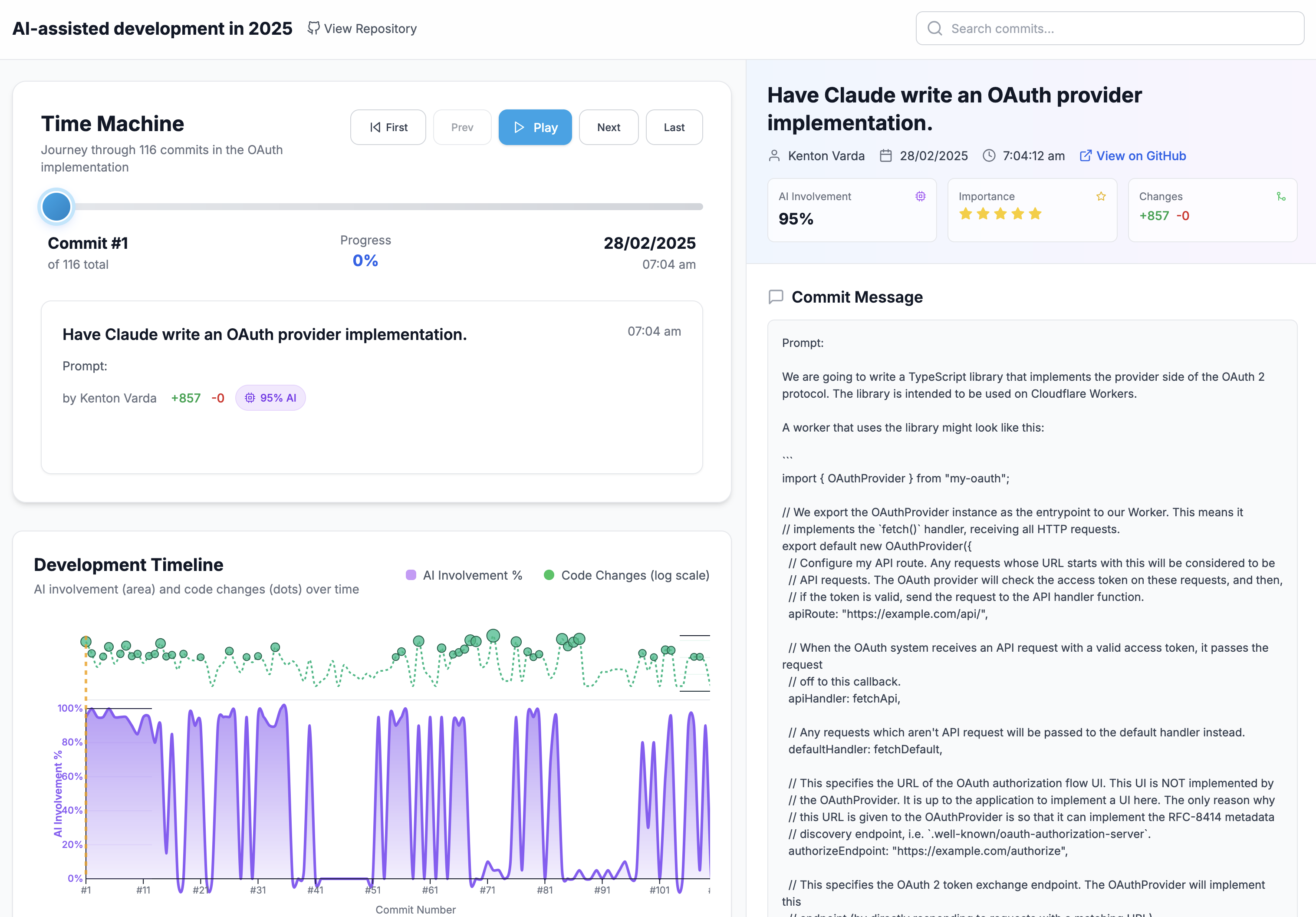

Let's look at what happened. If you want to follow along, you can use this thing Claude Code made for me: https://cf-commits-visualized.vercel.app/

Making things easier to look at

Making the site above took an hour. Here's what I did:

- Clone the repo
- Use Claude Code to make a gh cli script to get additional comment metadata into a json
- Use this script to run it through gemini-2.5-flash (using Cloudflare Gateway) to get some additional analysis
- Try making a frontend to look at everything
  - Use v0 with a prompt generated by Claude Code - very functional and decent
  - Use base44 - too many unnecessary elements, almost looks like a Squarespace landing page, but doesn't actually parse or read the data properly - remember that this is the same prompt!
  - Get Claude Code to make a plain html/js version that ended up being the best looking and the most functional, not to mention the fastest to load.
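For anyone who wants to reproduce the metadata step, here is a minimal sketch of what such a script could look like. It is not the script Claude Code wrote for me. The repo slug, the function names and the choice of fields are my assumptions for illustration, and `--slurp` needs a reasonably recent version of the gh CLI.

```python
import json
import subprocess
from typing import Any

# Assumption: the GitHub slug of the repo being analyzed.
REPO = "cloudflare/workers-oauth-provider"


def fetch_commits(repo: str = REPO) -> list[dict[str, Any]]:
    """Fetch every commit via the gh CLI (requires `gh auth login` beforehand)."""
    result = subprocess.run(
        # --paginate walks all pages; --slurp wraps the pages in one JSON array.
        ["gh", "api", "--paginate", "--slurp", f"repos/{repo}/commits?per_page=100"],
        capture_output=True,
        text=True,
        check=True,
    )
    pages = json.loads(result.stdout)
    # Flatten the list of pages into a single list of commit objects.
    return [commit for page in pages for commit in page]


def summarize_commit(raw: dict[str, Any]) -> dict[str, str]:
    """Keep only the fields needed for later analysis (hypothetical selection)."""
    message = raw["commit"]["message"]
    return {
        "sha": raw["sha"],
        "author": raw["commit"]["author"]["name"],
        "date": raw["commit"]["author"]["date"],
        "subject": message.splitlines()[0] if message else "",
        "message": message,
    }


def write_summaries(path: str, repo: str = REPO) -> None:
    """Fetch, trim and dump the commits to a JSON file."""
    summaries = [summarize_commit(c) for c in fetch_commits(repo)]
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(summaries, fh, indent=2)
```

Calling `write_summaries("commits.json")` would produce one JSON file that the later analysis step can read. Keeping `summarize_commit` separate from the network call makes it easy to test against a saved API response.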

Some interesting things in this process:

- Using XML for structured output functioned far better than I expected, even with more complex analysis. A lot more tricks are possible in XML than with JSON, like:
  - Finding tags with a direct search instead of looking for markdown blocks
  - Using tags to maintain prompt adherence instead of realizing halfway through a JSON that something is missing
- Sequential processing - dragging past context through prompts - worked better than in parallel.
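The XML tricks in the list above are easy to show in a few lines. This is a rough sketch, not my actual pipeline: the tag names (`summary`, `ai_involvement`, `risks`) and both helper functions are made up for illustration.

```python
import re
from typing import List, Optional


def extract_tag(text: str, tag: str) -> Optional[str]:
    """Return the stripped body of the first <tag>...</tag> pair, or None."""
    pattern = rf"<{re.escape(tag)}>(.*?)</{re.escape(tag)}>"
    match = re.search(pattern, text, re.DOTALL)
    return match.group(1).strip() if match else None


def missing_tags(text: str, required: List[str]) -> List[str]:
    """List the required tags the model did not produce, in the order given."""
    return [tag for tag in required if extract_tag(text, tag) is None]


# The model can chat around the tags; a direct search still finds them.
reply = (
    "Sure, here is the analysis.\n"
    "<summary>Adds token refresh handling.</summary>\n"
    "<ai_involvement>high</ai_involvement>\n"
)
print(extract_tag(reply, "summary"))
print(missing_tags(reply, ["summary", "ai_involvement", "risks"]))
```

Because each field is its own tag, a missing one shows up as a named gap you can re-prompt for, instead of a JSON document that fails to parse as a whole.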

To explain the sequential-versus-parallel point a little more, the two ways to process commits here would be:

a. In parallel: each prompt has the commit to analyze with some context, and produces some output.

b. Sequentially: each prompt has the results of the previous runs, and a current commit to analyze.

I found I had significantly better results with b. The tradeoff I expected was some confusion around which commit to focus on, but 2.5 flash didn't seem to have that issue. If you notice anything I missed in the results, let me know!
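Roughly, the difference between the two modes looks like the sketch below. `model` is a stand-in for whatever function sends a prompt to the LLM and returns its text. The prompt wording and the tag names are hypothetical, not the ones I used.

```python
from typing import Callable, List

# Hypothetical signature: prompt text in, model text out.
ModelFn = Callable[[str], str]


def analyze_parallel(commits: List[str], model: ModelFn) -> List[str]:
    """Each prompt sees only its own commit, so the calls are independent.

    They are issued one by one here for simplicity, but nothing stops
    them from being sent concurrently.
    """
    return [
        model(f"<current_commit>{commit}</current_commit>\nAnalyze this commit.")
        for commit in commits
    ]


def analyze_sequential(commits: List[str], model: ModelFn) -> List[str]:
    """Each prompt carries all earlier analyses plus the commit to focus on."""
    results: List[str] = []
    for commit in commits:
        history = "\n".join(
            f"<previous_analysis>{earlier}</previous_analysis>" for earlier in results
        )
        prompt = (
            f"{history}\n"
            f"<current_commit>{commit}</current_commit>\n"
            "Analyze only the current commit, using the previous analyses as context."
        )
        results.append(model(prompt))
    return results
```

The sequential version cannot be parallelized and its prompts grow with every commit, which is the price for letting later analyses build on earlier ones.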

Before my thoughts, Max's thoughts... are worth a read, as are Neil's....

Thoughts

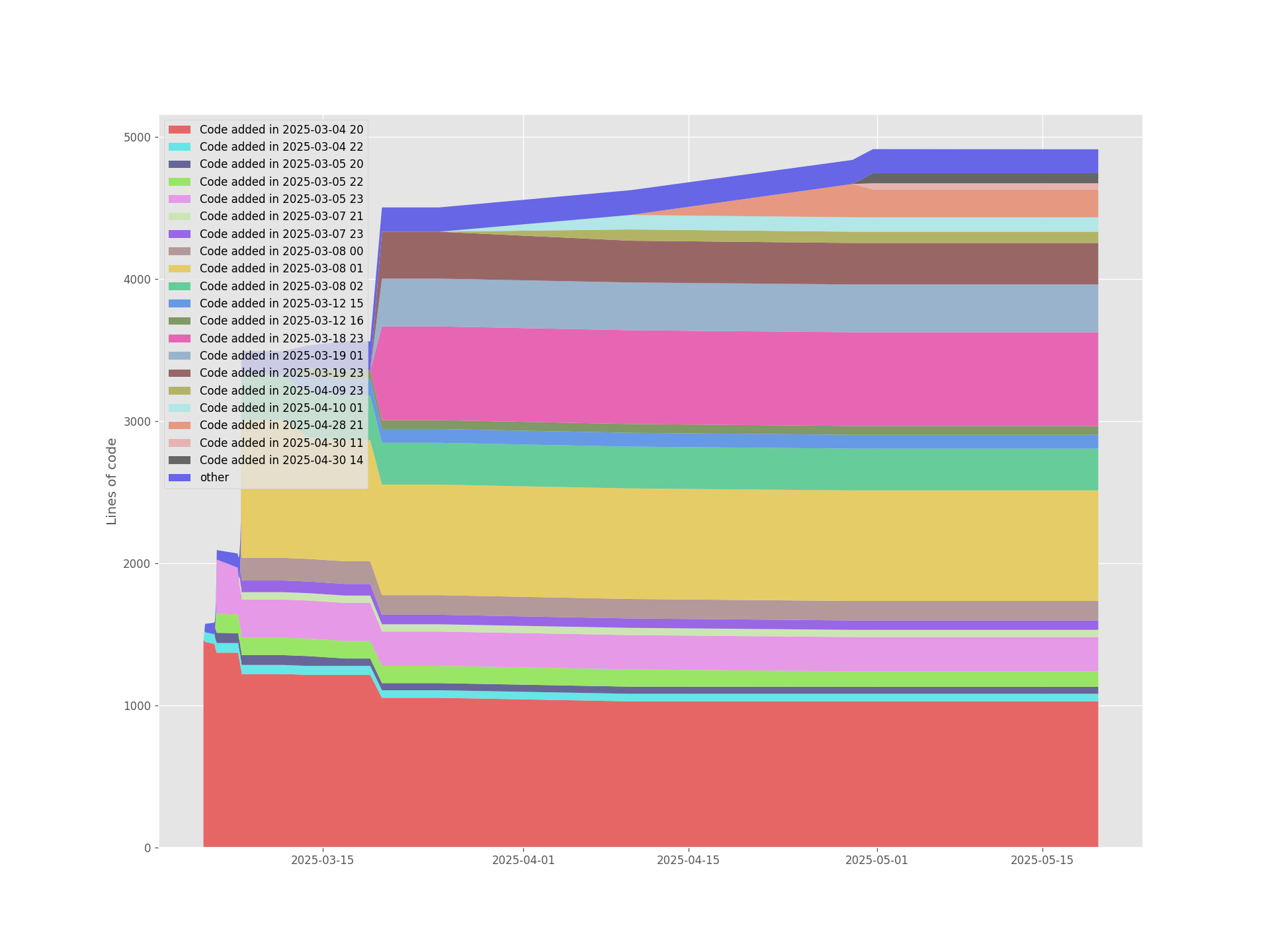

Looking at git-of-theseus, we see a pattern I've become very familiar with:

We have code being added in layers on top of each other, with not a lot of removals. I wouldn't be surprised if most of the core removals came from Ken (or were explicitly directed by Ken to CC) - from his logs it looks like this is the case.
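If you want a quick check of the "lots of additions, few removals" pattern without git-of-theseus, a much cruder proxy is to tally added and deleted lines per commit. This is not what git-of-theseus computes (it tracks how long lines survive). The sketch below assumes the text comes from `git log --numstat --format=@%H`, where the `@` prefix is just my own marker to make commit lines easy to spot.

```python
from typing import Dict, Optional, Tuple


def churn_by_commit(numstat_output: str) -> Dict[str, Tuple[int, int]]:
    """Map each commit hash to (lines added, lines deleted)."""
    totals: Dict[str, Tuple[int, int]] = {}
    current: Optional[str] = None
    for line in numstat_output.splitlines():
        if line.startswith("@"):
            # Commit header line produced by --format=@%H.
            current = line[1:]
            totals[current] = (0, 0)
            continue
        parts = line.split("\t")
        if current is None or len(parts) != 3:
            continue  # blank separator lines and anything unexpected
        added, deleted, _path = parts
        if added == "-" or deleted == "-":
            continue  # binary files report "-" instead of line counts
        prev_added, prev_deleted = totals[current]
        totals[current] = (prev_added + int(added), prev_deleted + int(deleted))
    return totals


sample = (
    "@aaa111\n\n"
    "10\t2\tsrc/oauth-provider.ts\n"
    "-\t-\tlogo.png\n"
    "@bbb222\n\n"
    "5\t0\tREADME.md\n"
    "1\t1\tsrc/oauth-provider.ts\n"
)
print(churn_by_commit(sample))
```

Commits where the first number dwarfs the second are the "layering" commits. The occasional deletion-heavy commit is where I would look for a human stepping in.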

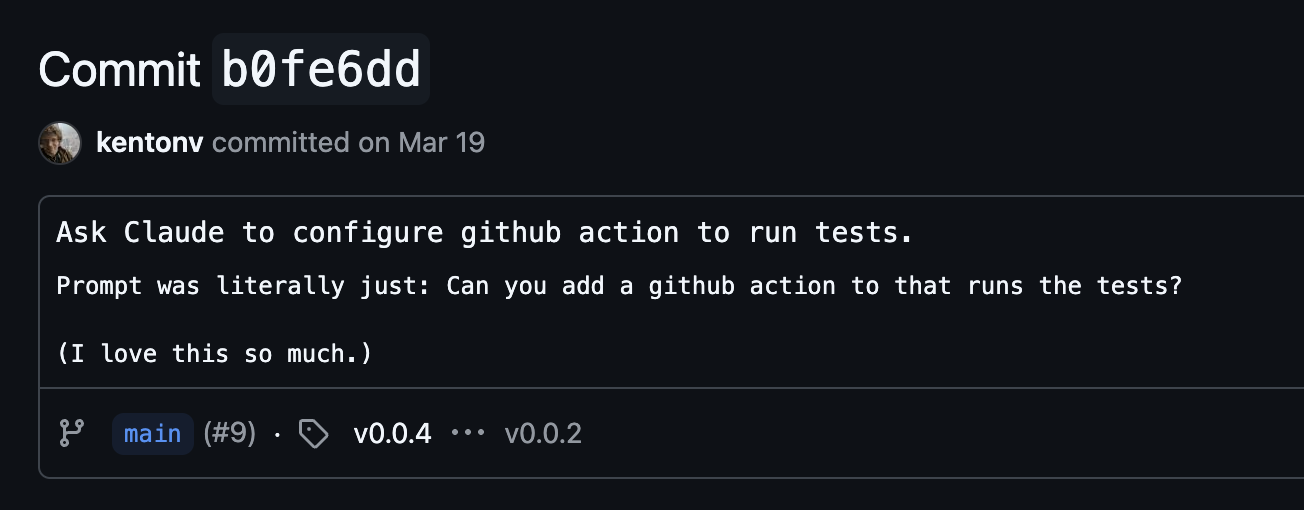

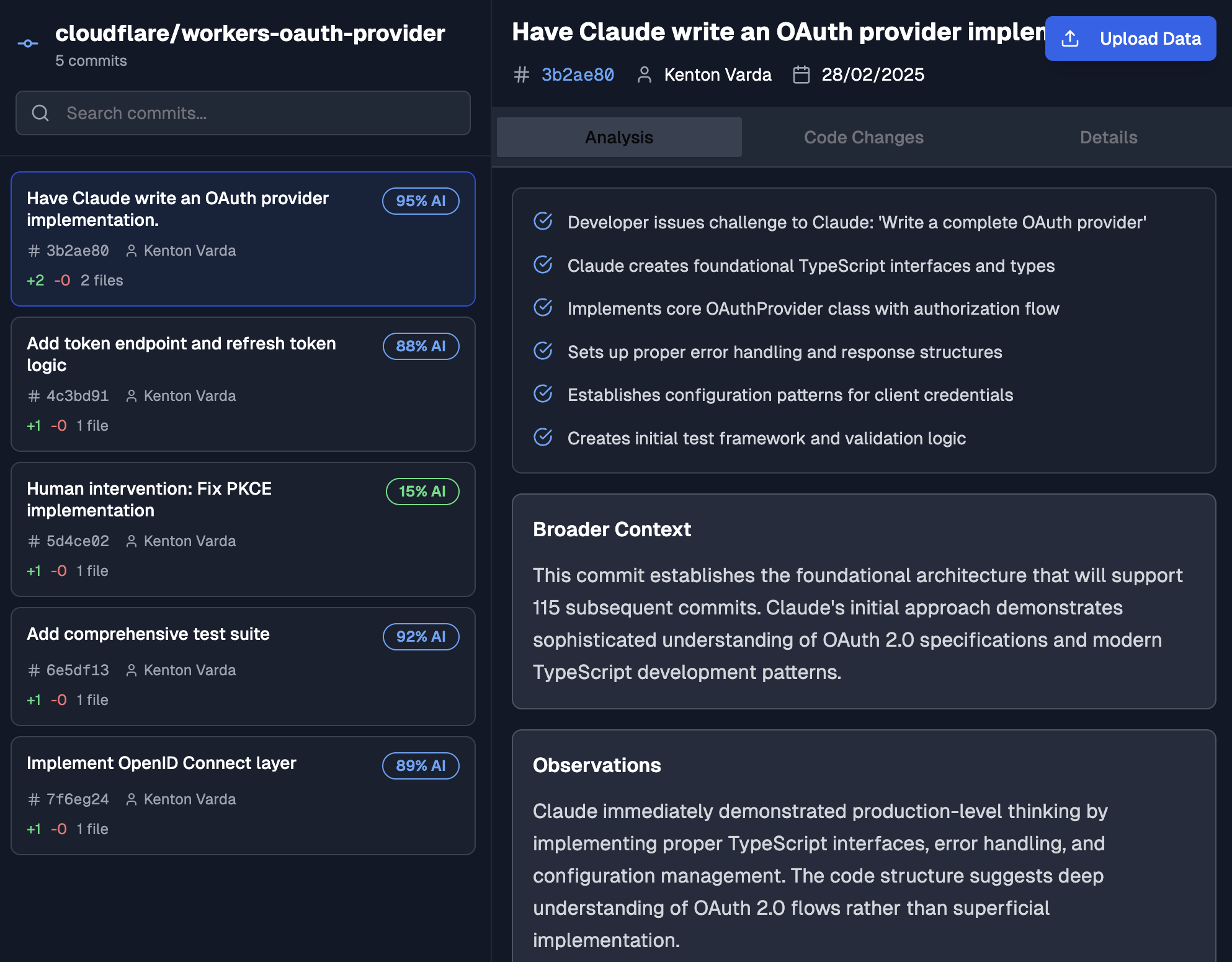

The initial commit is interesting, since it's leaving almost everything up to Claude Code. Not much is provided except the outer interface (through a user example) that we need to satisfy.

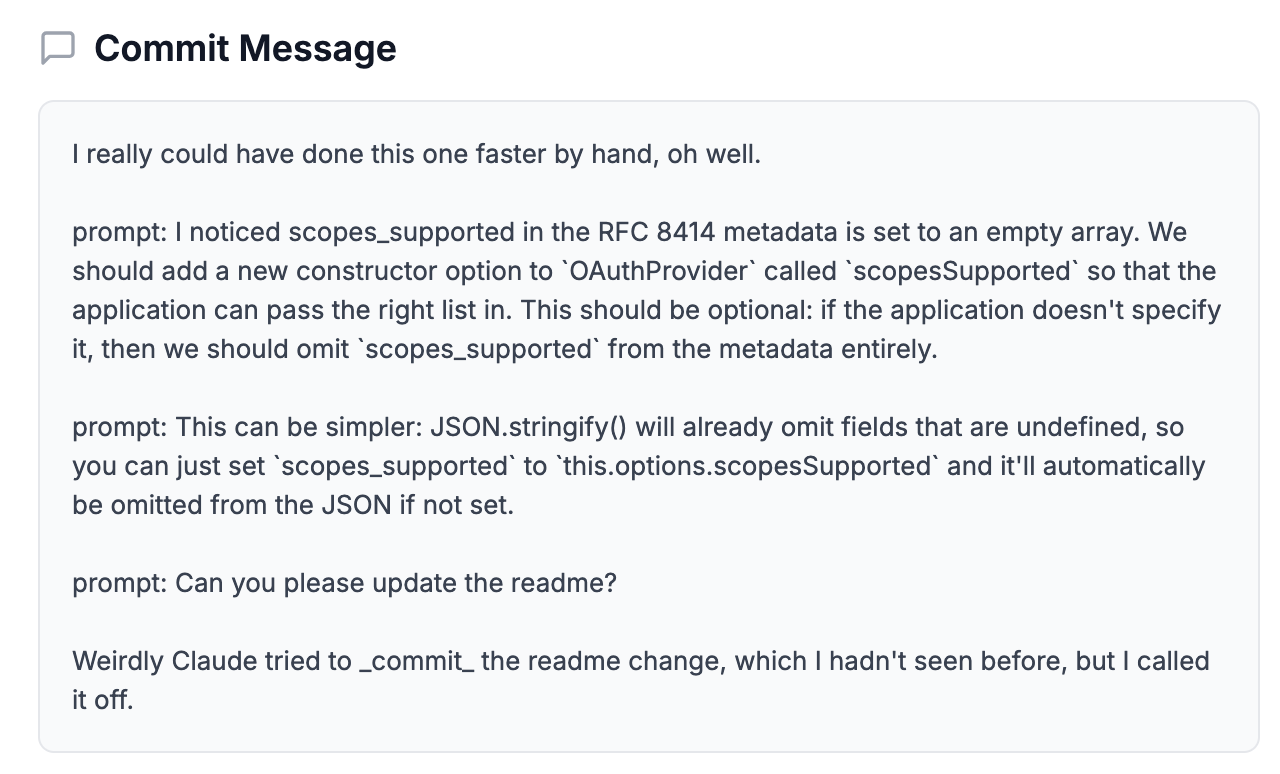

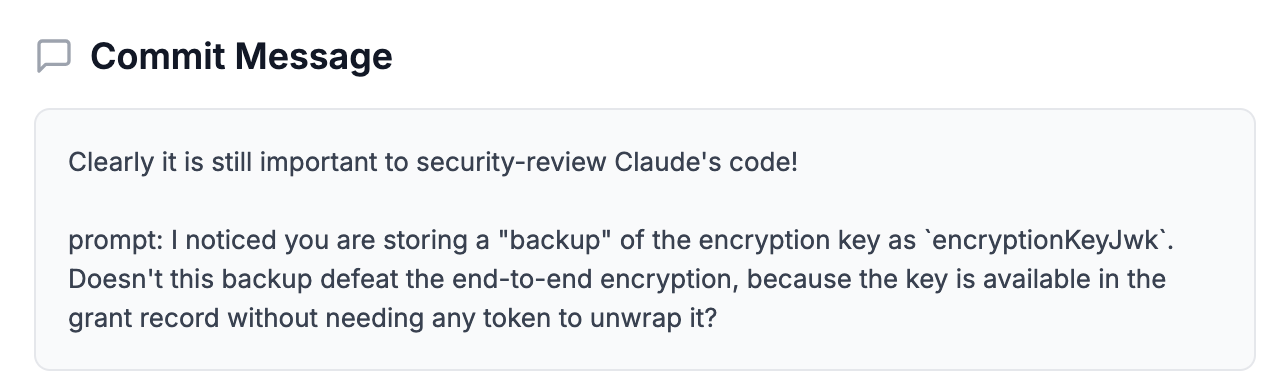

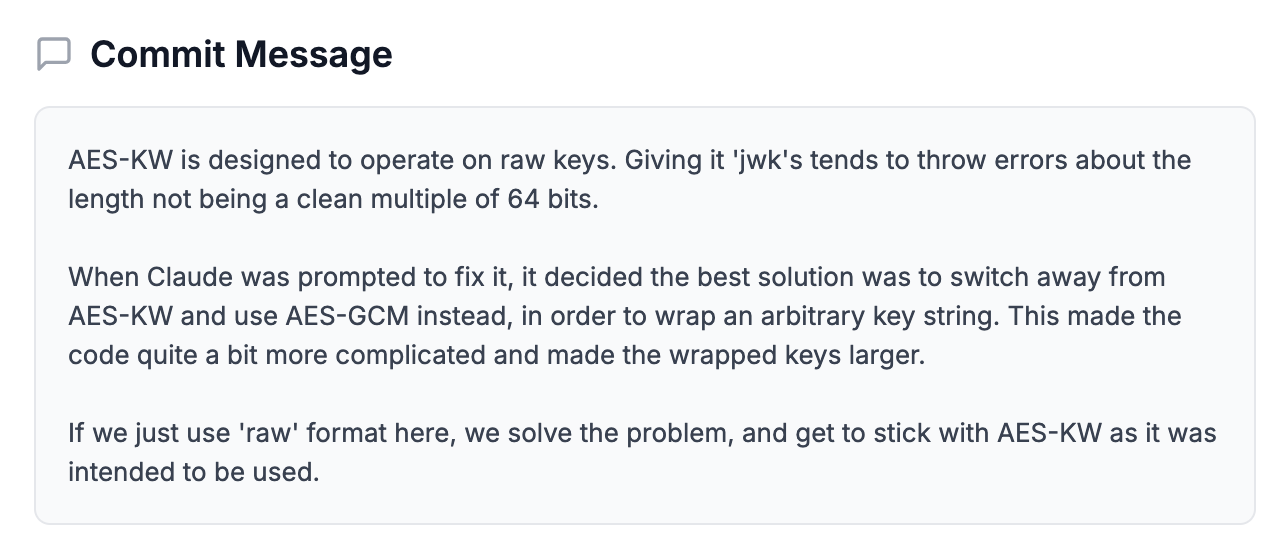

Some interesting commits:

Overall this still feels a lot like pair-coding with an eager junior. If we look at Gemini's review of the commits by level of AI-involvement, we see a graph that looks a lot like my own projects:

Write some code with AI, make some changes, make some more changes, hand control to the AI - repeat.

What's interesting is that two different general flows for coding with AI have emerged.

Pair programming with AI

The first one is to start with a broad intent - some level of spec about the output, some kind of user story - to generate some code, review and repeat. This is what Ken seems to be doing here, and it requires a few things to get to production with any meaningful level of complexity:

- A strong personal understanding of the code and what it's trying to do: without this, you'll often create issues that compound, and only get noticed much, much later in the development flow - when it's too late to change things. Pick up any of Ken's fixes, and you'll see problems that were noticed early that could have caused big problems later on:

- Thorough review of generated code before proceeding to add more functionality: I confess I might not have caught half of the things Ken catches in his review. AI-generated code has the dangerous property of 'looking right and clean' in a way that's deceptive. It can very neatly embed wrong things in the code that cause issues later down the road. Without careful review, this kind of change is one that I might have breezed past:

Spec-driven AI development

The second, which is the one I've found myself following, is to start with very detailed specs. Often I'll have 10-20k words of specs, covering behavior, architecture choices, pitfalls, testing methodologies - all before calling Claude Code once. I'll write something up about this later, but it comes with its own tradeoffs.

Overall this has been really interesting. It's always insightful to review another engineer's process and see what behaviors emerged. In the context of writing production code with agentic coding tools, this is likely the first example of many.