This report about Claude Code was inspired by Geoff Huntley's post on AI tradecraft from March, a free Friday, and some hours trying to see how far we could push broad agentic intelligence.

After all the work, it was about 30K tokens of generated output from Opus 4. This post (about 10K tokens worth) is entirely from yours truly, and was more useful in understanding agentic systems for me than the original report.

What follows is everything I've learned orchestrating subagents made of every flagship model, manually reviewing their output, trying different methods of coordination, and figuring out what works. If you liked the Claude Code report, this is how it was made. If you didn't, this should help you figure out what I could've done better!

So what did I do?

Here's a quick list of the things I learned, before we cover them in detail:

- Chunking is important, but not for the reason you think.

- Agent hordes will hallucinate in very different ways than individual models - the reason is agentic intermediates (outputs created by one agent that become misleading inputs for another).

- Models are incredibly smart - almost superhuman - but comprehension is not the same thing as explanation. Intelligence is sometimes only as good as what can be expressed (same as this article).

- Context management is extremely important, perhaps the most important thing. Summarisation is a BAD IDEA.

- Agentic coordination is MapReduce with finite memory. Turns vs Spread is always going to be an ongoing decision. Do you do more turns with fewer agents, or more agents with fewer turns?

- The appropriate agentic flows will (for a long time) involve multiple different models. No one model is good at everything, often because the characteristics you want compete with each other.

- Figuring out how and when to inject human preference (and knowledge) is hard.

However, what struck me (like with daedumi and ipgu) is how much of the work I did here can be turned into code, to be run unsupervised. I think we're leaving a lot on the table with current agentic loops.

Here's the final process as a whole:

Let me explain. If you'd rather jump to my turn-by-turn notes from the actual process (with representative prompts and responses), you can use this ToC:

Bringing input into a system

Context limitation - or human exhaustion

Intermediates

Just me

Just subagents

Opus 4 Superagent 1

Opus 4 Superagent V2

Opus 4 Superagent V3

Map before Reduce

1:1 transformations (or passing data through)

Context management

Process Notes

Opus 4 - Attempt 1

Gemini 2.5 Pro with larger chunks - Attempt 2

Agent coordination attempt 1 - parallel coordination

Agent coordination attempt 2 - orchestrator agent

Report Generation - Attempt 1

Improving the report about Claude Code with Claude Code

Regenerating the report with Opus 4

From the LLMs who brought you this work

From Opus 4

From Gemini 2.5 Pro

Bringing input into a system

In my case, I started with something massive and incomprehensible to a human. Let's use the WASM compiled build of sqlite3 as an example. It's 750K tokens of dense output. Can we figure out what's in it? How would we even go about doing this?

What would we even get if we put this into a model's context and ask?

Turns out, quite a bit:

Gemini 2.5 Pro: Control Flow Analysis

The only prompt was Can you elaborate on the control flow here?

Here's what we got:

Okay, this WebAssembly module is quite substantial, and it's compiled from C/C++ code, likely SQLite given the numerous sqlite3_ function names.

Here's an elaboration on its control flow, based on the typical patterns in such WASM modules and the visible exports/imports:

- Initialization (__wasm_call_ctors):

  - When the WASM module is instantiated by the host environment (e.g., a JavaScript runtime), one of the first things that typically happens is the execution of global constructors. The exported function __wasm_call_ctors is the Emscripten-standard way to achieve this.

  - This function will likely iterate through a list of function pointers (stored in the module's data or table) and call each one. These are the C++ global object constructors or C functions marked with __attribute__((constructor)).

  - It's possible that sqlite3_initialize() is called during this phase, or it might be deferred until the first SQLite API call.

- Main Entry Points (Exported Functions):

  - The host environment will interact with the module by calling its exported functions. The extensive list of sqlite3_... exports (sqlite3_open, sqlite3_prepare_v2, sqlite3_step, sqlite3_exec, sqlite3_close_v2, etc.) are the primary entry points for SQLite functionality.

  - When one of these is called, control transfers from the host to the corresponding WASM function.

- Internal SQLite Logic:

  - Function Calls (call): Inside any given sqlite3_ function, there will be numerous direct calls (call <func_idx>) to other internal helper functions within the WASM module. SQLite is a large codebase with many modularized pieces of logic.

  - Indirect Function Calls (call_indirect):

    - SQLite uses function pointers extensively for its VFS (Virtual File System), custom functions, collations, and other extension mechanisms. These will be implemented using call_indirect in WASM.

    - The __indirect_function_table export is the table used for these indirect calls. The first argument to call_indirect is the expected function signature index, and the second is the index into this table.

    - For example, when SQLite needs to read from a "file," it will call a VFS method (e.g., xRead) via an indirect call.

  - Virtual Database Engine (VDBE):

    - When sqlite3_step is called, it executes SQLite's bytecode. The VDBE is essentially a large loop (or a series of dispatched jumps based on opcodes).

    - Each VDBE opcode will trigger a specific block of code. This is a major control flow hub within SQLite, involving many conditional branches (br_if) and jumps (br, br_table).

  - Parsing: sqlite3_prepare_v2 involves parsing the SQL string. This is another complex piece of logic with many branches to handle different SQL keywords, expressions, and statement structures.

  - Memory Management: Calls to sqlite3_malloc, sqlite3_free, sqlite3_realloc (and their 64-bit variants) will be frequent. These will either map to internal memory management routines or to imported functions like malloc, free, realloc (which are also exported, suggesting they might be provided by Emscripten's runtime linked in). These can further call emscripten_resize_heap or similar mechanisms if the heap needs to grow.

- Structured Control Flow Instructions:

  - Within each WASM function, the C/C++ control flow structures (if/else, loops, switch) are compiled into WASM's structured control flow instructions:

    - block, loop, if/else/end: Define nested blocks.

    - br <label_idx>: Unconditional branch to a label (an ancestor block).

    - br_if <label_idx>: Conditional branch.

    - br_table <vec<labels>> <default_label>: Implements switch-like behavior.

    - return: Exits the current function.

- Interaction with Host/Environment (Imports):

  - WASI/Syscalls: The module imports many functions from wasi_snapshot_preview1 (e.g., fd_close, fd_write, fd_read, fd_seek, environ_sizes_get, environ_get, fd_fdstat_get) and env (e.g., __syscall_faccessat, _emscripten_get_now, _mmap_js, _munmap_js).

  - When SQLite's VFS or other parts of its code need to perform system-level operations (like file I/O, getting the current time, allocating large memory regions), they will ultimately call these imported functions.

  - At this point, control transfers from the WASM module to the host environment. The host executes the requested operation and then returns control (and any results) back to the WASM module, which then continues execution.

  - For example, a VFS read operation will call the imported wasi_snapshot_preview1.fd_read.

  - _tzset_js and _localtime_js suggest time zone and local time handling that involves JavaScript calls.

- Stack Management (Emscripten Specifics):

  - Functions like _emscripten_stack_restore, _emscripten_stack_alloc, emscripten_stack_get_current are related to Emscripten's stack management, especially if a dynamic stack (sALLOW_STACK_GROWTH) or stack switching (for asyncify) is used. This primarily affects how function calls and returns manage their stack frames.

  - The sqlite3__wasm_pstack_... functions also strongly suggest custom stack management for specific SQLite operations within the WASM environment, perhaps for an alternative to the main C stack for performance or specific constraints.

- Error Handling:

  - Most SQLite functions return an integer error code. These are checked, and control flow branches based on success or failure. Errors typically propagate up the call stack.

  - The sqlite3__wasm_db_error function might be a custom error reporting mechanism.

In Summary:

- Startup: __wasm_call_ctors -> global/SQLite initializers.

- Main Loop (Conceptual): Host calls sqlite3_... API -> WASM function executes ->

  - Internal direct calls (call) to helper WASM functions.

  - Indirect calls (call_indirect) for VFS, UDFs, etc.

    - These indirect calls might lead to WASI/syscall imports (host execution).

  - VDBE execution (sqlite3_step) involves an opcode dispatch loop.

  - Memory allocation calls.

  - Control returns to the host.

- Low-level: Conditional (br_if) and unconditional (br, br_table) branches manage if/else, loops, switch statements within functions.

The control flow is intricate, typical of a large C/C++ application compiled to WASM. It's a mix of direct calls within the module, indirect calls for extensibility and VFS, and calls out to the host environment for system-level services. A full, detailed control flow graph would be enormous and require specialized disassemblers and analysis tools.

The first problem we'll run into is chunking. This isn't as simple as it looks - most work (especially code, and human output) tends to be sparse in how information is encoded. To understand a single part of the system, you'll need to unify information from chunk 1 with a part of chunk 7, and know that the actual references or control system sits in chunk 9. Maybe the references are named in chunk 11.

This is why recursive summarisation (see "Lost in the Middle: How Language Models Use Long Contexts") works to some extent. There are a number of patterns I've tried before, to varying levels of success. This was my first try:

Iterate through the chunks with the same question in context, while pulling through context from the other chunk results.

This works well, but not for exploration - often you don't know what you're looking for.
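The iteration pattern above can be sketched roughly as follows. This is a minimal illustration, not the code used for the report: `split_into_chunks`, `iterate_with_carryover` and the `ask` callable (a stand-in for whatever model call you use) are all hypothetical names, and splitting by character count is an assumption made to keep the example short.

```python
from typing import Callable, List


def split_into_chunks(text: str, n_chunks: int) -> List[str]:
    """Split text into at most n_chunks pieces of roughly equal character length."""
    if not text:
        return []
    size = -(-len(text) // n_chunks)  # ceiling division
    return [text[i:i + size] for i in range(0, len(text), size)]


def iterate_with_carryover(
    chunks: List[str], question: str, ask: Callable[[str], str]
) -> List[str]:
    """Ask the same question of every chunk, carrying earlier answers forward."""
    notes: List[str] = []
    for i, chunk in enumerate(chunks, start=1):
        prompt = (
            f"This is part {i}/{len(chunks)}.\n"
            "Notes from previous parts:\n" + "\n".join(notes) + "\n\n"
            f"Question: {question}\n\n{chunk}"
        )
        # `ask` is a placeholder for a model call; each answer becomes
        # context for every later chunk.
        notes.append(f"[part {i}] {ask(prompt)}")
    return notes
```

Note that the carried notes grow with every chunk, which is one way the context pressure described in this post shows up.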

In my case - as the orchestrator agent - I asked multiple questions to each subagent (each one sitting on 5% of the whole thing), picked out the best parts from their outputs, delineated them, and provided this context to the next agent.

The one difference between code and human output (which hurts us here) is that code is rarely sequential. There's no guarantee that the first part of the codebase is the most important, or that the second part will connect to the first.

This meant going back and forth between the agents ferrying information, which is where we hit the next problem.

Context limitation - or human exhaustion

After chunk seven I couldn't do it any more. I couldn't hold in mind the necessary things to pass between agents, I was forgetting who was who, and my outputs became almost nonsensical, the same way a model's do when it runs out of context.

I was also getting pretty heavy context poisoning at this point. The subagents (and myself) were finding it hard to keep track of what was a dependency and what wasn't.

I was using Opus 4 at this point, which is expensive at almost $4 per call, but really smart. If we take an intelligence hit, we can move to Gemini 2.5 Pro, which let me do 3 chunks total for far cheaper.

This worked a lot better, because Gemini is far better at generating intermediates.

Intermediates

If I could make one prediction, it would be that this is the biggest unsolved problem in agentic systems today. Leaving useful and appropriate intermediates for another intelligence is not a trivial task. You have to rely on an almost theory-of-mind level understanding, of what the other agent (human or AI) will already know and need to know when constructing an intermediate.

Think of this post for example. Let's say I need to stop writing here, and hand it over to someone. What would my intermediate be? It depends on who I'm handing off to:

- Myself, tomorrow: this is a lot like running out of context and restarting. I still know the same things and want the same things, and I'll write mostly the same way. All I need to do is leave a bunch of post-its or bullet points on what I was thinking when sleep beckoned, and I'll be able to continue. Much more than that would be a waste of time (and context) for me to generate right now - if I have another 300 words in me, they're better spent on actual writing.

Tokens: ~800, difficulty: easy

- One of the wonderful people at Southbridge: Given that these are people I know well and have worked with in close quarters, I'll need to give them my intent with the piece, my raw material, my notes (with more detail), and maybe some corrective guidelines based on how I know they write and how I write. That should be enough - if I have time I'll maybe throw in an example or a piece I was using for inspiration. In an ideal situation, I would give it to them in person where we get another 5000-8000 tokens exchanged as speech in real time.

Tokens: ~10K, difficulty: medium

- A ghostwriter or an AI model: Things get easier and more difficult at the same time. With a model, I can rely on the same model as the one I've been using for a while, so I can use my instinct about the model to see what it would do, maybe run a few test prompts (like we'll do later) to improve my intermediate. However, I also have to be very careful about leaving it enough tokens to think and reason.

Tokens: 20K (~5K spent learning), difficulty: medium

Now consider agent-to-agent coordination. If you've used Claude Deep Research extensively, you'll notice that it often fails at the subagent boundary. Sometimes not enough information is ferried between the main writer and the subagent, in either direction.

I tried five different approaches here - each working better than the last:

Just me

The first one was simply me acting as the orchestrator. With a significantly higher context window and actually persistent memory (and similar in cost to Opus 4), I make a pretty good superagent. However, I can't run multiple copies of me, can't reset my thinking to a minute ago, and I'm also prone to distraction. Very prone to distraction. Plus we don't learn much about AI-on-AI interactions.

Just subagents

The second approach was subagent-to-subagent communication - no superagent here. Each agent working on its chunk got to ask questions to each other agent, and the answers were simply copied and pasted across. After three rounds of questions, they started asking nonsensical things, had no emergent method of marking what was important, and constantly (even at the 2.5 Pro level of intelligence) tried to generate responses for the other agents.

Opus 4 Superagent 1

The third approach was an Opus 4 superagent that reviewed responses and drafted new prompts for each agent (four at this point - I moved to four chunks to free up context). Each round of responses to questions was ferried back, and we got four new prompts.

This worked well for the first two rounds, but Opus was pretty quickly out of context and unable to continue - and none of my attempts at starting a new chat with some amount of intermediates really worked, and I was back to being the real superagent.

Opus 4 Superagent V2

The next try was an Opus 4 superagent that did a single prompt with questions to ask each subagent, where the subagents were created fresh each time. This worked a lot better, but without some of the additional context and instructions, they went back to the same mistakes - not ignoring dependencies, getting confused by partial code, etc.

When this happened, the superagent (which doesn't have enough context to know when the subagents are wrong) happily integrated this knowledge and began hallucinating. This is a different kind of hallucination, kind of similar to the ones you've seen o1 do sometimes. This is a very confident hallucination where the superagent will assign a very high confidence score to a wrong answer, because it came from outside its own output.

These problems are harder to deal with - how do you supervise a superagent, when they're all starting to run out of context?

Agents talking to each other can generate a lot of text - at one point I had 300K tokens of purely agentic conversations, and it was hard to read it all.

Opus 4 Superagent V3

This is the one that worked best. Opus 4 was instructed to create one prompt per round that would be given to all subagents, and the subagents maintained state and conversation history. I could do about five rounds if I acted as a cleanup agent removing irrelevant things (and 'I don't know, ask the other guy' messages) from the returning context.

Here's why this worked significantly better for our use case:

- A broad single prompt forced on-topic conversation about things that related to the entire context, rather than finding rabbitholes in a single chunk. In our case, this is exactly what we're looking for. In other cases - like an agentic retrieval system - you might be better off pruning subagents, asking directed questions, and zeroing in on relevant parts beforehand.

- Emergent behaviors begin showing up. Opus at some point starts marking questions as HIGH and LOW priority, and the subagents respond appropriately. There's a possibility that with more rounds of conversation, languages and protocols would develop around communicating certainty.
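As a rough sketch of the V3 loop described above: one broad prompt per round goes to every subagent, each subagent keeps its own conversation history, and replies pass through a cleanup step before the superagent sees them. The function name `run_rounds` and the `superagent`, `subagent` and `cleanup` callables are hypothetical stand-ins (in the process described here the cleanup step was a human), so treat this as an illustration of the shape rather than the actual setup.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def run_rounds(
    superagent: Callable[[str, List[str]], str],
    subagent: Callable[[List[Message]], str],
    cleanup: Callable[[str], str],
    chunks: List[str],
    goal: str,
    rounds: int,
) -> List[str]:
    """Broadcast one prompt per round to stateful subagents and collect findings."""
    # Each subagent holds its chunk plus its full conversation history.
    histories: List[List[Message]] = [
        [{"role": "system", "content": chunk}] for chunk in chunks
    ]
    findings: List[str] = []
    for _ in range(rounds):
        # One broad prompt per round, written with everything learned so far.
        prompt = superagent(goal, findings)
        for history in histories:
            history.append({"role": "user", "content": prompt})
            reply = subagent(history)
            history.append({"role": "assistant", "content": reply})
            # Cleanup returns an empty string for replies worth dropping.
            cleaned = cleanup(reply)
            if cleaned:
                findings.append(cleaned)
    return findings
```

The subagent histories are never trimmed here, so the number of rounds is bounded by the subagents' context length, which matches the roughly five rounds mentioned above.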

One additional thing makes the Opus - Gemini combination work really well. Opus is really good at prompting, and at embedding enough context into prompts to make questions more self-evident. Gemini has significantly more context length, which makes it possible to do multiple turns while holding the chunks in memory. It's also smart enough to take instructions and return long outputs which, when paired with a Hrishi cleanup agent, give high-quality data back.

Here are the approaches on a graph (made by Claude):

At this point we have the outputs, and we need to generate a report. The 300K or so tokens of outputs, questions and answers serve as a useful intermediate. How do we generate the report?

Map before Reduce

Most agentic systems fall into the MapReduce or Dynamic Programming pattern. To generate our report, here's what we need to do:

- We need to figure out a structure.

- We need to write the content.

The first one's easy - I did it myself. It wasn't really easy - and even for me there were a lot of decisions involved. I'll cover the exact considerations in the actual process itself.

Once we have a structure, there are two ways to do it:

- Give each section to a subagent (like Lumentis does).

- Do them sequentially in the same chat.

We went with option 2. It makes for far more readable reports; there's less duplication of content, and the sections flow better. Imagine if five copies of me were writing these sections with just an outline.
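A minimal sketch of option 2, assuming a chat-style interface: every section is written in the same conversation, so each new section can see the ones before it. `write_report_sequentially` and the `write` callable are hypothetical names standing in for a real model call.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def write_report_sequentially(
    outline: List[str],
    source_notes: str,
    write: Callable[[List[Message]], str],
) -> List[str]:
    """Write sections one after another in a single shared conversation."""
    history: List[Message] = [
        {"role": "user", "content": "Source material:\n" + source_notes}
    ]
    sections: List[str] = []
    for title in outline:
        history.append({"role": "user", "content": f"Write the section: {title}"})
        text = write(history)  # the writer sees every earlier section
        history.append({"role": "assistant", "content": text})
        sections.append(text)
    return sections
```

The trade-off is that the whole intermediate plus all earlier sections must fit in one context window, which is why model choice matters at this step.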

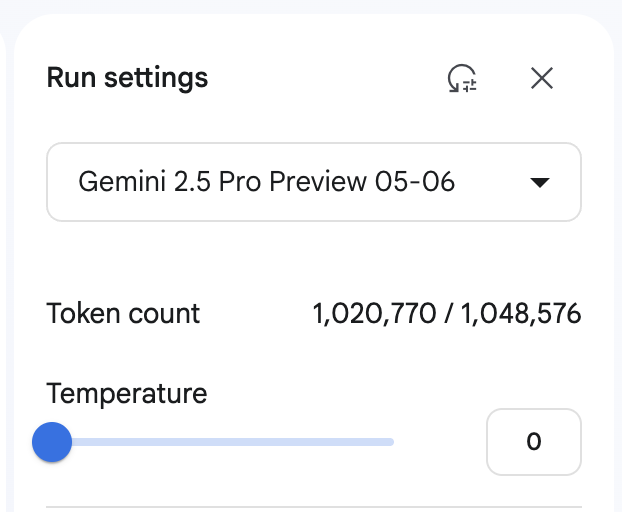

Gemini 2.5 Pro at this point is the only model that can hold this context - and therefore drew the short straw.

This is where we have a small subproblem (that's still pretty intractable to fix):

In our case this meant me manually reading the output and asking for more and checking to make sure it wasn't just expansion for the sake of expansion.

Once we've generated the report (and read through the sections), are we done?

Not really - what we have is a really high quality intermediate we can use to generate the final report. If we've done our job, we now just need to rewrite this thing without losing information.

Before we do that, we can use Claude Code to clean up and organise the report, and check git diffs periodically to make sure we weren't editing out content.
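The same kind of check can be approximated without git. The sketch below uses Python's standard `difflib` (swapped in here for git diffs purely for illustration) to list lines that existed before a cleanup pass and no longer appear anywhere afterwards. `dropped_lines` is a hypothetical helper, and reworded lines will be flagged too, so the result is a list for a human to review rather than proof of lost content.

```python
import difflib
from typing import List


def dropped_lines(before: str, after: str) -> List[str]:
    """Return non-blank lines from `before` that appear nowhere in `after`."""
    surviving = {line.strip() for line in after.splitlines()}
    dropped: List[str] = []
    for line in difflib.ndiff(before.splitlines(), after.splitlines()):
        # ndiff prefixes lines that exist only in `before` with "- ".
        if line.startswith("- "):
            text = line[2:].strip()
            # Lines that merely moved still exist in `after`, so skip them.
            if text and text not in surviving:
                dropped.append(text)
    return dropped
```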

1:1 transformations (or passing data through)

This is the final part. Two techniques were used here. The first is iterative re-prompting - which I think is still under-explored in AI-to-AI orchestration.

In most human/AI-to-AI cases, the standard pattern is that one entity writes a prompt and the other one carries it out, and the first entity reads the output. This is how human-to-human work functions today - but it fails to take advantage of the nature of an artificial intelligence.

Imagine if you could go back to a conversation with a friend and reset them to before you said something, to try again?

Given the nature of models, here's a pattern that works whether you're a human or an AI prompting an agent:

- Write a prompt.

- Run it.

- Stop the output halfway (or let it complete).

- Adjust the original prompt.

- Repeat.

It's incredibly important to get the start of an agent's execution right - or the mistakes will follow you around. Most agentic systems today attempt correction through multi-turn messaging - at the cost of context length and context poisoning. It's better not to have to tell a model halfway through to 'NOT THINK ABOUT ELEPHANTS'.
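The loop above can be sketched as follows. The key property is that every attempt is a fresh run of an edited prompt, rather than a correction appended to a growing conversation. `refine_prompt`, `run` and `revise` are hypothetical names: `run` stands in for a model call with no prior history, and `revise` for whoever (human or AI) reads the output and edits the prompt, returning `None` when satisfied.

```python
from typing import Callable, Optional, Tuple


def refine_prompt(
    prompt: str,
    run: Callable[[str], str],
    revise: Callable[[str, str], Optional[str]],
    max_attempts: int = 5,
) -> Tuple[str, str]:
    """Re-run from scratch with an edited prompt instead of correcting mid-chat."""
    output = run(prompt)
    for _ in range(max_attempts - 1):
        revised = revise(prompt, output)
        if revised is None:  # the reviewer is happy with this output
            break
        prompt = revised
        output = run(prompt)  # fresh run: earlier outputs never enter context
    return prompt, output
```

Stopping the output halfway, as in the third step, would live inside `run`; it is left out here to keep the sketch short.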

Once we had a good starting prompt, a gaggle of models (Opus, o3, etc) came up with a style guide, which was joined into one. With this guide, Opus 4 generated the rest of the report.

Is it perfect? No. Does it hallucinate still? Absolutely. Did I learn an insane amount of things about model orchestration both from Claude Code and from this process? Yes. Would you? I really think so.

The final thing (and perhaps the second most important) to mention is something we all do intuitively when working with AIs.

Context management

The most important thing in agentic situations is context management. Claude Code does quite a bit, but there is still low-hanging fruit to capture.

For one, summarisation does not work. Anyone who's used Cursor or Claude Code can tell you that context summarisation is where things often go off the rails.

When you try and compress context, here's what you want to do:

- Preserve useful bits and prune out (sometimes completely) the unnecessary loops and random things.

- Maintain user/superagent preferences, no matter how small. Sometimes this is embedded in the way a response is phrased ('DON'T DO THAT' vs 'ah - not like that').

- Remove repetition in the context. Most long-running agents have repeated bits of the same information - and it's often useless.

- Restate the current goal, and the broader context surrounding that goal.

The most valuable thing in a multi-turn conversation is the guided path that emerges as a result of human/superagent-subagent interaction. All of this is lost when you simply summarize, especially with another agent that doesn't really know that much.
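To make the compression checklist above concrete, here is a small sketch of editing a context instead of summarising it. It is an illustration under assumptions, not how Claude Code or any particular tool works: `compact_context` is a hypothetical function, and deciding what counts as a preference or as noise is delegated to the caller through `is_preference` and `is_noise`.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def compact_context(
    messages: List[Message],
    goal: str,
    is_preference: Callable[[Message], bool],
    is_noise: Callable[[Message], bool],
) -> List[Message]:
    """Prune a conversation by editing it, keeping original wording intact."""
    kept: List[Message] = []
    seen = set()
    for msg in messages:
        content = msg["content"].strip()
        # Preferences are kept verbatim: the phrasing itself carries signal.
        if not is_preference(msg):
            if is_noise(msg) or content in seen:
                continue  # drop dead ends and exact repeats
        seen.add(content)
        kept.append(msg)
    # Restate the current goal at the end of the pruned context.
    kept.append({"role": "user", "content": f"Current goal: {goal}"})
    return kept
```

Nothing is paraphrased in this approach, so the guided path of the conversation survives in the messages that remain.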

Here are a few alternatives:

- Before it dies, the agent can start a new subagent with a detailed prompt. Sometimes you'll find that this works far better than a summary.

- The agent itself can identify and edit the context to remove the unimportant bits.

We do all of these things when manually prompting models, sometimes without thinking.

If I could leave you with one thing - whether you're prompting models or building agents - it's to play with context a lot more. Edit, reorganise, split, join, go wild.

With that done, here's a play-by-play of the actual process.

Process Notes

Opus 4 - Attempt 1

I started by placing the first of twenty parts into the system prompt, and used this user prompt as a start to see what I could even get.

I've provided you with a program. We want to understand it and how it works. This is part 1/20. Can you use the knowledge from previous parts (if they exist) and rewrite a doc in full covering:

1. What the key functionalities are?

2. How are they implemented?

3. What are the novel parts of the system?

4. How does the code work? Control flow, etc?

Think through it in detail and then give me a markdown doc.

We get something like this:

We continue the conversation after reviewing the output, asking for deeper analysis:

Okay perfect. Let's ignore the error stuff and go deeper into:

<more questions>

We then ask for a proper markdown condensed doc, and we get this:

Opus 4: System Overview Documentation (Part 1/20)

Claude Code v1.0.6 - A Node.js-based development runtime with comprehensive monitoring, execution, and observability capabilities built by Anthropic.

Core Functionalities

1. Command Processing

- Shell Parsing: Advanced tokenization with quote handling, escape sequences, operators

- Variable Expansion: Environment variable substitution with defaults

- Command Substitution: Nested command execution

- Cross-Platform: Windows/Unix path normalization

2. Monitoring & Observability

- Error Tracking: Full Sentry integration

- Distributed Tracing: OpenTelemetry-style spans

- Performance Metrics: Web Vitals, Core Web Vitals

- Breadcrumbs: Execution trail tracking

3. Integrations

- Databases: PostgreSQL, MySQL, MongoDB, Prisma

- Web Frameworks: Express.js, GraphQL, Apollo

- HTTP: Automatic request/response instrumentation

- Node.js: Native module hooking

4. Security & Privacy

- PII Protection: Configurable data sanitization

- Pattern Matching: Email, IP, credit card, SSN detection

- Field Filtering: Automatic sensitive field redaction

- Selective Disclosure: Configurable PII inclusion

5. Context Management

- Execution Context: Span creation and correlation

- Async Propagation: Context preservation across async boundaries

- Scope Isolation: Hierarchical scope management

- Header Propagation: Distributed tracing headers

Key Architectural Patterns

Module System

- Dynamic import resolution

- Dependency injection

- Module caching (LRU)

Error Handling

Capture → Process → Normalize → Transport → Report

Data Flow

Input → Parse → Context → Execute → Monitor → Output

Instrumentation

- Automatic library detection

- Method wrapping

- Performance timing

- Error interception

Technical Implementation Notes

Command Parser Features

- State machine for quote parsing

- Operator precedence handling

- Glob pattern detection

- Multi-character operator support

PII System

- Regex-based pattern matching

- Deep object traversal

- Configurable field allowlists

- Partial masking for sensitive data

Context System

- AsyncLocalStorage for context propagation

- Trace/span ID generation

- Baggage header serialization

- Scope stacking and merging

Important Constants/Limits

- Max breadcrumbs: 100

- Default sample rate: 1.0

- Context propagation: sentry-trace, baggage headers

- PII patterns: email, IP, credit card, SSN, phone

Integration Points

- HTTP/HTTPS clients

- Database drivers

- Web frameworks

- Shell command execution

- File system operations

Discovered APIs

- Event processing pipeline

- Span lifecycle management

- Scope configuration

- Transport abstraction

- Metrics aggregation

This summary represents analysis of Part 1/20. Additional functionality expected in subsequent parts.

Not too bad - we move on to some more parts. When we have that many parts, the biggest concern is carrying context through the process, so we don't have hallucinations, artifacts of partial processing, etc. One of the additional problems we notice with Opus is the tendency to be concise and lose information (even though it's the most verbose model in the Claude family), which will become a big problem as we go on. It's also bloody expensive.

The next system prompt for part n becomes:

<datapartn>

...

</datapartn>

<ExploredSubsystemsFromOtherParts>

</ExploredSubsystemsFromOtherParts>

<ContextFromPreviousChunks>

</ContextFromPreviousChunks>

We maintain a running log of the context from other chunks, and I'm extracting (sometimes rewriting) specific subsystems we want to pay additional attention to. This is proving to be really manual. In about 20 minutes I'm only through 6 chunks, and I constantly get the feeling they're hallucinating things that don't exist. Even more common are large dependencies like Sentry being read as part of the core code, completely confusing the entire process.
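Assembling that system prompt by hand for each part is the kind of step that can be turned into code. A minimal sketch, reusing the tag names from the template above; `build_system_prompt` and its parameters are hypothetical names, and the running log and subsystem notes are assumed to be plain lists of strings.

```python
from typing import List


def build_system_prompt(
    part_number: int,
    part_text: str,
    explored_subsystems: List[str],
    context_log: List[str],
) -> str:
    """Wrap one chunk and the carried-over notes in the tags used above."""
    tag = f"datapart{part_number}"
    return "\n\n".join([
        f"<{tag}>",
        part_text,
        f"</{tag}>",
        "<ExploredSubsystemsFromOtherParts>",
        "\n".join(explored_subsystems),
        "</ExploredSubsystemsFromOtherParts>",
        "<ContextFromPreviousChunks>",
        "\n".join(context_log),
        "</ContextFromPreviousChunks>",
    ])
```

Deciding what goes into the two lists is still the manual, judgment-heavy part; this only removes the copy and paste.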

Still it's amazing that LLMs are even able to understand as much as they do. Here's the user message as we progress:

We want to understand it and how this works. This is part 9/20. Can you use the knowledge from previous parts (if they exist) and rewrite a doc in full covering:

1. What the key functionalities are here?

2. How are they implemented?

3. What are the novel parts of the system?

4. How does the code work? Control flow, etc?

Think through it in detail and then give me a markdown doc. Be on the lookout for artifacts from dependencies. Also given what you know this is, elucidate what the purpose could be of the things you find.

Here's an example output from part 9:

Opus 4: Telemetry Infrastructure Analysis (Part 9)

Part 9 reveals the sophisticated observability infrastructure of Claude Code, built on OpenTelemetry standards with custom protocol buffer implementations for efficient data serialization. This system enables comprehensive monitoring of LLM interactions, code generation performance, and resource utilization.

Key Components Architecture

┌─────────────────────────────────────────────────────────────┐

│ Telemetry Infrastructure │

├─────────────────────────────────────────────────────────────┤

│ Protocol Layer │ Protobuf Serialization │

├─────────────────────────────────────────────────────────────┤

│ Metrics Engine │ OpenTelemetry SDK │

├─────────────────────────────────────────────────────────────┤

│ Aggregations │ Histogram, Sum, Gauge, Exponential │

├─────────────────────────────────────────────────────────────┤

│ Export Pipeline │ OTLP Exporter with Batching │

├─────────────────────────────────────────────────────────────┤

│ Transport │ gRPC/HTTP with Compression │

└─────────────────────────────────────────────────────────────┘

←— breakdown in detail —>

Key Innovations

- Exponential Histogram with Dynamic Scaling: Efficiently captures metrics with wide value ranges without predetermined buckets

- Async Resource Attributes: Supports cloud metadata that requires async resolution

- Multi-Producer Collection: Allows plugins to contribute metrics without coupling

- Cardinality Limiting: Prevents metric explosion from high-cardinality attributes

- Partial Success Handling: Gracefully handles partial metric export failures

This telemetry system provides the observability necessary for maintaining a production LLM coding assistant, enabling data-driven improvements and rapid issue detection.

Let's try Gemini instead.

Gemini 2.5 Pro with larger chunks - Attempt 2

Gemini's MASSIVE context window means we can fit everything in 3 chunks, so let's try that.

We start with a more targeted user prompt, and we'll add more as we understand the system. At this point I'm just trying to figure out if Gemini can even function with this much context stuffing. I would really love to see the internal attention heads and activations with this kind of a task:

Testing Gemini's limits: over 1 million tokens of minified code in a single context

<datapart1>

...

</datapart1>

Review this and cover a few things for me in exhaustive detail with examples:

1. How are tool calls handled? What is the format of output for the llm? How are the read and processed? Does the program wait until the assistant has finished?

2. How are tool outputs inserted back into context?

3. What tools and functions exist for managing the length of the context window and organizing it?

4. What data structures are used to manage the overall context of the entire execution of the agent? Provide detailed specs.

5. What are the main dependencies you detect?

6. Ignore dependencies (like react, highlight.js, etc or any that you can detect) - what are the most novel or interesting parts of the system?

It can! We get something like this - and it's refreshing to see how well Gemini uses the massive output length:

Gemini 2.5 Pro: Tool Call Handling Analysis

This appears to be a sophisticated CLI tool with an interactive React-based UI (Ink), tool-using capabilities, context management, and integration with various services.

Let's break it down based on your questions:

1. Tool Call Handling

Tool calls are a central part of how this agent interacts with the user's environment and performs actions.

Format of LLM Output for Tool Calls:

The LLM is instructed to output XML-like tags to request tool use. This is evident in the system prompts, particularly for the AgentTool ($$2) which is told:

You can use the tools by emitting a XML block like this example: <tool_code><tool_name>MyTool</tool_name><tool_input>{"foo": "bar"}</tool_input></tool_code>

So, the expected format from the LLM is:

<tool_code>

<tool_name>ToolNameGoesHere</tool_name>

<tool_input>{"json_input_for_the_tool": "value"}</tool_input>

</tool_code>

It can also output <thinking>...</thinking> blocks and, in some cases for specific tools like the EditTool, it might be expected to produce a diff or a specific structured output within its text response which is then parsed.

How LLM Output is Read and Processed:

The main loop (tt which likely stands for "text turn" or "tool turn" in hQ5, the main App component) processes messages.

- Streaming: The LLM's response is streamed. The

Fs(likelyEventStreamProcessororMessageStreamProcessor) andXg(its base class or a similar processor) classes handle events likemessage_start,content_block_start,content_block_delta,content_block_stop, andmessage_stop. - Content Block Parsing: As content blocks arrive:

content_block_start: If it's atool_useblock, it initializes the tool use state. If it'sthinking, it displays the thinking process.content_block_delta:- For

text_delta, it appends text to the current assistant message. - For

input_json_delta, it incrementally builds the JSON input for a tool. This is important for tools that might receive large JSON inputs, allowing the system to parse it progressively. ThePD1function (likelyparsePartialJson) is used here. - For

thinking_delta, it updates the displayed thinking process.

- For

- Tool Identification and Input Parsing: When a

tool_useblock is fully received (indicated bycontent_block_stopor implied by subsequent blocks/message end), the system:- Extracts the

tool_nameandtool_input(which should be a JSON string). - Finds the corresponding tool definition from a list of available tools (e.g., in

Iwithin_j, which seems to be the main tool array). - Parses the

tool_inputJSON string. The tool'sinputSchema(likely a Zod schema,n.object(...)) is used to validate this input.

- Extracts the

- Permission Check: Before execution, a permission check is performed (

hmlikelyhandlePermissions, which calls the tool'scheckPermissionsmethod and consults thetoolPermissionContext). This can result in:allow: Tool runs.deny: Tool is blocked, an error message is shown.ask: An interactive prompt is shown to the user (e.g.,_V2forEditTool,bV2forBashTool).

- Tool Execution:

- If allowed, the tool's

callmethod is invoked with the parsed input and a context object (e.g.,jQinld, which containsreadFileState,abortController,options). - Tool

callmethods are oftenasync*generators, meaning they canyieldprogress updates or partial results before the final result. These are emitted asprogresstype messages. S1("tengu_tool_use_success", ...)andS1("tengu_tool_use_error", ...)are likely telemetry/logging calls for tool execution.

- If allowed, the tool's

Does the program wait until the assistant has finished?

- For a single turn: Yes. The system processes the LLM's output sequentially. If the LLM outputs a tool call, the system executes that tool and waits for its result (or streams its progress). It then formulates a

tool_resultmessage and sends that and the preceding conversation history back to the LLM for the next part of its response. - For streaming LLM output within a turn: No, it processes chunks as they arrive. This allows for displaying text incrementally, showing "thinking" indicators, and beginning to parse tool inputs before the entire LLM response is complete.

- Overall Interaction: The interaction is turn-based. The user sends a message, Claude processes it (potentially using tools multiple times within its turn), and then Claude sends a final assistant message (or an error). The system then waits for the next user input.

Example Flow:

- LLM streams:

<thinking>Planning to edit file foo.txt</thinking><tool_code><tool_name>Edit</tool_name><tool_input>{"file_path": "/path/to/foo.txt", "old_string": "bar", "new_string": "baz"}</tool_input></tool_code> - System displays "Planning to edit file foo.txt".

- System parses

Edittool call. - System checks permissions for

Editon/path/to/foo.txt. - If user denies, sends error back. If user allows:

a.

Edit.call()is invoked. b.Edit.call()mightyield { type: "progress", data: "Reading file..." }. c. System displays "Reading file..." d.Edit.call()eventuallyyield { type: "result", data: { filePath: ..., structuredPatch: ... } }. - System takes the

resultdata, usesEdit.mapToolResultToToolResultBlockParamto format it into atool_resultcontent block. - This

tool_resultblock, along with the original user message and the assistant'stool_useblock, is sent back to the LLM in the next API call (or appended to the current context for the LLM to continue its turn if it hasn't finished).

2. How are Tool Outputs Inserted Back into Context?

Tool outputs are inserted back into the conversation history as a specific type of message or content block, which is then part of the input to the LLM for its next generation step.

tool_use_id: When the LLM requests a tool, the system often generates a unique ID for that specific tool invocation (e.g.,tool_use_idinJV2forBashToolprogress, or theidfield in atool_usecontent block).

…(reduced for brevity)

Now that that works, we run it in parallel and have three outputs. How do we coordinate and merge outputs?

Agent coordination attempt 1 - parallel coordination

At this point we want to see how smart the agents are if we just give them each other's outputs.

Here's our message:

<firstroundresults>

<agent1>

...

</agent1>

<agent2>

...

</agent2>

</firstroundresults>

You are agent 3 of 3 that are reviewing this data. Here are the outputs from the other agents. You have a high resolution view of one part, and the other agents have a view of the other parts. Can you integrate their intermediates and come up with two things:

What can you learn about the things you found out from the other agents results? Integrate and give me a new output.

What questions can we ask the other agents so we can explore in detail the parts that might only be found in areas you don't have access to?

Remember that this is me manually reading everything and doing the orchestration myself with copy and paste, but so far there's nothing here (other than deciding which things to ignore and focus on) that couldn't be completely automated.

The agents seem reasonably able to handle this, with some amount of interesting questions, but they very quickly devolve into asking for things that don't exist, or asking leading questions that none of the other ones can answer. We're also running out of context.

There are a lot of new insights there, but it slowly devolves into not making any real progress. I tried a few different times with different questions, but we're really starting to push what subagent to subagent communication can do.

The next option is to have an orchestrator.

Agent coordination attempt 2 - orchestrator agent

Before we start, we don't have to throw our outputs away. We can use about 60k tokens worth of the Gemini subagents' output, and give them to Claude, Gemini and o3 to generate a report outlining all the findings, areas to focus on, unclear parts, etc.

Here's the prompt:

<fullagentconversations>

...

</fullagentconversations>

Okay so I'm trying to understand this thing. I have three llm agents working on understanding one part each (split into three parts). Here is their original analysis, followed by combined review and further questions, and then the answers to those questions.

here are the original questions I started with (these are not exhaustive, merely meant to be guiding exploration into capturing as much learning as possible):

-

How are tool calls handled? What is the format of output for the llm? How are the read and processed? Does the program wait until the assistant has finished?

-

How are tool outputs inserted back into context?

-

What tools and functions exist for managing the length of the context window and organizing it?

-

What data structures are used to manage the overall context of the entire execution of the agent? Provide detailed specs.

-

What are the main dependencies you detect?

-

Ignore dependencies (like react, highlight.js, etc or any that you can detect) - what are the most novel or interesting parts of the system?

Based on the information, what are the most important and novel parts? What is the architecture? What are the exhaustive data structures used for communicating with the llm and maintaining state? List and ignore dependencies in your analysis - we're really only interested in the core parts of the system.

Provide your analysis as a long, detailed, well contextualized markdown document.

Apologies for any typos from here on - at this point, I've been talking to 4 or 5 LLMs concurrently for four hours.

Not in vain though - the output is pretty good and has fewer hallucinations. Asking LLMs to check for inconsistencies (now that we have multiple answers) also gives us a good idea on what areas to inspect for problems.

The result is a pretty good comparison between how the flagship models like to write, structure and what they find themselves interested in. o3 to me had the best use of formatting, 2.5 pro had the most detail that made the report a useful intermediate to be substituted for the original content, and Claude found some interesting things the others missed.

These reports are about 20K tokens so we've now got enough room (and starting context) for the orchestrator to begin operating. In the interest of guiding the orchestrator, I manually construct a prompt adding in the best parts of each report, and adding more instructions:

Here's the context: There is a large piece of data we're trying to understand. I've worked with multiple AI agents looking pieces of this data to understand and pull out the most important and novel parts, and to work towards understanding the system. I've provided their outputs to you after some summarization.

What we want to do next is deepen our understanding. Can you look at these, and write me detailed prompts for the four agents (working on four pieces of the code) with questions, instructions, etc and enough context, that you can use their answers to build a more detailed understanding of things?

Give me three markdown artifacts first:

1. Architecture - the architecture of the system in high detail as you understand it.

2. Data structures - same deal.

3. Novel things to understand and explore - add any more even outside my list if you think fit. Cover our understanding as it exists, and point out the edges and things we don't know.

Then write me the prompt that all four agents will initially operate on - ask me to add any of your other outputs as context if needed, you don't need to reproduce it in the prompt.

<additional_context>

Manually written assembly of each report's best parts...

</additional_context>

Claude as always gives me the artifacts and does the job, but makes them so brief as to be unusable.

However, it's the current leader in writing prompts for subagents - probably why Anthropic can get away with just subagent prompting for Claude DR:

The Subagent Prompt Template

Context: You are analyzing a portion of a large system - a sophisticated agentic coding assistant with CLI interface and LLM orchestration capabilities. We have developed an initial understanding of the architecture and identified key areas that need deeper investigation.

Your Task: Examine your assigned portion to help us understand the actual implementation details of this system. Focus on extracting concrete information about how things work, not just what they do.

Available Context Documents:

- Architecture Overview (ask the user to provide "Architecture - Consolidated Understanding")

- Data Structures Reference (ask the user to provide "Data Structures - Consolidated Reference")

- Novel Components & Knowledge Gaps (ask the user to provide "Novel Components & Knowledge Gaps")

Primary Investigation Areas:

- Context Fabric Construction

- Find the exact algorithm for assembling context from multiple sources

- Identify token allocation strategies and priority rules

- Look for Git command executions and output formatting

- Understand directory tree traversal and filtering logic

- Document how attachments (IDE selections, recent files) are integrated

- Generator-Based Tool Execution

- Locate the actual async generator implementations for tools

- Find yield patterns and progress event structures

- Identify error handling and resource cleanup mechanisms

- Look for backpressure or flow control logic

- Tool Call Protocol Implementation

- Find XML parsing code for tool calls:

<tool_code><tool_name>...</tool_name><tool_input>...</tool_input></tool_code> - Trace the flow from LLM response parsing to tool execution

- Identify result serialization back to ContentBlock format

- Look for streaming assembly of partial tool calls

- Find XML parsing code for tool calls:

- Bidirectional Streaming Verification

- Search for HTTP/2 or WebSocket implementations

- Find stream pause/resume mechanisms

- Look for code that handles tool execution during active streams

- Identify the actual protocol/message format used

- Default Tools and Configurations

- List all built-in tools (ReadTool, EditTool, BashTool, WebFetch, etc.)

- Extract their prompt strings and descriptions

- Find configuration constants (MAX_BASH_OUTPUT_LENGTH, token limits, etc.)

- Identify tool-specific input schemas and validation rules

- Specific Implementation Details

- Shell parser with ZT sentinel for JSON embedding - find the actual implementation

normalizeToSizealgorithm - understand the iterative depth reductionparsePartialJson- find the heuristic logic- AgentTool's sub-agent spawning and result synthesis

- CLAUDE.md file parsing and @-mention resolution

- Architecture Verification

- Confirm or correct our understanding of the layer architecture

- Identify the main event loop (

ttfunction) implementation - Trace data flow between layers

- Find state management and synchronization mechanisms

Output Format:

For each area you investigate, provide:

- Code Locations: Identify function names, module references, or code patterns

- Implementation Details: Explain how it actually works based on the code

- Data Structures: Document any structures not in our reference

- Configuration Values: Extract hardcoded limits, defaults, timeouts

- Examples: Provide code snippets showing key algorithms or patterns

- Corrections: Note any misunderstandings in our current documentation

Special Focus Areas:

- Why are Web Vitals (CLS, LCP, FID) being collected in a CLI tool?

- Is Hub/Scope a Sentry embedding or custom implementation?

- How does recursive AgentTool actually manage context isolation?

- What's the complete list of MCP transport implementations?

Remember: We're working with a complex piece of data, so variable names may be obfuscated. Focus on understanding the logic flow and data transformations rather than getting stuck on naming.

Please organize your findings by the investigation areas above, and be specific about what you can confirm vs. what remains unclear from your portion of the code.

This prompt asks too many broad questions though, and goes off in directions we don't want. I ask for 'just a list of questions that I highlighted with a few more thrown in', and get this:

Structured Investigation Questions

Context: The system weaves memory from multiple sources: CLAUDE.md files (managed > user > project > local), Git status/log, directory snapshots, attachments, and conversation history.

Questions:

- What is the exact algorithm for assembling context from these multiple sources?

- How are different context sources prioritized and interleaved?

- What is the token allocation strategy - how much space is reserved for each component?

- What specific git commands are run and how is their output formatted for context?

- How deep does directory tree traversal go and what filtering rules are applied?

- How are IDE selections and recently edited files integrated as attachments?

- How does the system dynamically decide what to include/exclude when approaching token limits?

Generator-Based Tool Execution

Context: Tools are implemented as async generators that yield progress and results, enabling streaming feedback.

Questions:

- How is the async generator pattern actually implemented for tools?

- When should a tool yield progress vs buffering output?

- What is the exact structure of progress events yielded by different tools?

- How do errors propagate through the generator chain?

- How are resources cleaned up when a tool is aborted via AbortController?

- Is there any backpressure handling if the UI can't keep up with progress events?

Tool Call Protocol & Processing

Context: The system uses XML format for tool calls: <tool_code><tool_name>ToolName</tool_name><tool_input>{"parameter": "value"}</tool_input></tool_code>

Questions:

- How is the XML parsing implemented and how robust is it to malformed XML?

- How are partial tool calls assembled during streaming?

- What is the exact transformation pipeline from raw tool output to ContentBlock format?

- How are different error types formatted and presented back to the LLM?

- What truncation strategies are used for tools with verbose outputs?

- How does the system serialize/deserialize tool inputs and validate them against Zod schemas?

Bidirectional Streaming

Context: One agent mentioned the system supports bidirectional streaming where the LLM can pause mid-stream for tool execution.

Questions:

- How is bidirectional streaming actually implemented - HTTP/2, WebSockets, or something else?

- What is the exact mechanism for pausing/resuming an LLM stream?

- What is the protocol/message format for bidirectional communication?

- How are tool results injected back into an active stream?

- What happens if tool execution fails while a stream is paused?

- Are there timeout limits for how long a stream can stay paused?

Default Tools & Configuration

Questions:

- What is the complete list of built-in tools beyond ReadTool, EditTool, BashTool, and WebFetch?

- What are the exact prompt strings and descriptions for each tool?

- What are the specific configuration values (MAX_BASH_OUTPUT_LENGTH, file size limits, etc.)?

- What are the Zod schemas/input validation rules for each tool?

- What models are available and what are their token limits and default parameters?

AgentTool & Recursion

Context: AgentTool can spawn sub-agents with isolated contexts that are later synthesized.

Questions:

- How exactly does AgentTool spawn sub-agents - what's the mechanism?

- How is context isolation achieved between parent and sub-agents?

- Is readFileState shared or copied between agents?

- What is the exact synthesis prompt used to merge sub-agent results?

- Are there recursion depth limits or memory constraints?

- How do abort signals propagate through the agent tree?

Specific Algorithms

Questions:

- Shell Parser: How does the

${JSONVar}embedding work with the ZT sentinel? What's the exact format? - normalizeToSize: How does the iterative depth reduction work? How does it decide what to keep/discard?

- parsePartialJson: What heuristics are used to close braces/brackets for progressive parsing?

- processToolCallsInParallelBatches: How does it maintain result ordering while executing in parallel?

- INP Tracer: How does it correlate PerformanceEntry.interactionId to routes in a CLI context?

Architecture Mysteries

Questions:

- Web Vitals: Why is a CLI tool collecting CLS, LCP, FID, TTFB, INP? Is there a hidden web component?

- Hub/Scope: Is this just embedded Sentry or a custom implementation for Claude Code?

- MCP Transports: What are all the transport implementations (stdio, SSE, WebSocket)?

- Main Loop: Can you find and describe the

ttfunction that manages the conversation loop?

CLAUDE.md System

Questions:

- What are the exact parsing rules for @-mention includes in CLAUDE.md files?

- How are circular includes prevented?

- How is token counting done for included files?

- How are conflicts resolved when the same setting appears in multiple hierarchy levels?

- What is the size limit that triggers warnings?

Permission System

Questions:

- What is the exact pattern matching algorithm for glob patterns?

- How are bash prefix rules (like

git:*,npm:*) implemented? - How is the 5-scope precedence (cliArg → userSettings → localSettings → projectSettings → policySettings) enforced?

- How are "don't ask again" choices persisted?

This is much better! I ran them against Gemini 2.5 Pro subagents (with 4 chunks this time to give more breathing room to subsequent follow-ups) - with this prompt:

The Orchestrator Prompt

Agent 1: Infrastructure & Instrumentation Deep Dive

Context & Background

Known Key Components:

- Sentry-like Hub/Scope Architecture:

Hubclass (Ip) manages stacks ofScopeobjects (Ik) for layered context management - Comprehensive Performance Monitoring: Web Vitals tracking (CLS, LCP, FID, TTFB, INP) via modules

EkandFU1 - Shell Command Parser: Module

D2Awith functionG_2supporting operators, quoting, variable expansion, globbing - Promise Buffer System:

makePromiseBuffer(ax2inv9A) for concurrency management - Extensive Instrumentation: Console (

Yw1), DOM events (Xw1), network (Ow1,ww1), navigation (Rw1), errors (Nw1,Mw1) - Normalization Utilities: Module

luwithnormalizeToSize,walkfor object processing - Offline Capabilities: Module

VGAwithlocalforageintegration

System Architecture Context: The system is a hybrid CLI/LLM platform where your infrastructure layer supports:

- A React/Ink-based CLI interface (handled by another part)

- LLM interactions with tool-calling capabilities (handled by another part)

- Advanced context management and safety features

Critical Questions Requiring Investigation

1. Web Vitals & Performance Monitoring Mystery

Background: A CLI application having extensive Web Vitals monitoring (CLS, LCP, FID, TTFB, INP) is architecturally puzzling.

Specific Questions:

- Can you trace the data flow of Web Vitals metrics? Where do they get reported or used?

- What triggers the performance monitoring? Are there event listeners suggesting web content interaction?

- Does

_interactionIdToRouteNameMappingin INP tracking suggest the system generates or analyzes web applications? - Are there any references to headless browsers, DevTool APIs, or web page generation?

- Look for evidence of:

- Browser automation tools (Puppeteer, Playwright, etc.)

- Web development frameworks

- DevTool extension APIs

- HTML/CSS generation or analysis tools

2. Hub/Scope State Management Integration

Background: The system uses React state for main application logic, but also has this Sentry-like Hub/Scope system. The integration is unclear.

Specific Questions:

- In the Hub/Scope implementation, can you find any non-Sentry usage patterns?

- What specific data gets stored in

Scope._contexts? Are there any keys like "conversation", "llm", "agent", "tools"? - How does the

_isolationScopediffer in usage from regular scopes? - Are there any custom event processors or scope listeners that suggest agent-specific usage?

- Can you find evidence of the main application state (like conversation history) being referenced or stored in scopes?

- Look for any custom Hub/Scope subclasses or extensions beyond standard Sentry usage

3. Shell Parser Implementation & Usage

Background: The shell parser (D2A, G_2) is sophisticated but its usage context is unclear.

Specific Questions:

- Can you trace all callers of the

G_2function? Where and how is it invoked? - What does the parsed output look like? Can you provide examples of input strings and their structured output?

- How does variable expansion (

I_2function) work? What environment or context does it use for variable lookup? - Are there any security features or sanitization built into the parser?

- Does the parser integrate with actual shell execution, or is it purely for analysis?

- Look for any command validation, filtering, or transformation logic

4. Concurrency & Async Architecture

Background: The Promise Buffer suggests sophisticated async management, but its applications are unclear.

Specific Questions:

- Beyond Sentry transport, where else is

makePromiseBufferused? - Are there any patterns suggesting parallel task execution (tool calls, background processes)?

- Can you find evidence of task queuing, throttling, or rate limiting mechanisms?

- How does the system handle async context propagation (AsyncLocalStorage patterns)?

- Are there any worker threads, child processes, or other concurrency primitives?

5. Error Handling & Resilience

Specific Questions:

- What retry strategies are implemented beyond basic exponential backoff?

- How are different error types classified and handled?

- Can you find circuit breaker patterns or fallback mechanisms?

- What logging and telemetry systems are integrated beyond Sentry?

- Are there any health check or monitoring endpoints?

6. Data Normalization & Serialization

Background: The lu module handles object normalization, but its scope of usage is unclear.

Specific Questions:

- Where is

normalizeToSizecalled from? What types of objects are being normalized? - How does the normalization integrate with token counting or context window management?

- Are there any special handling patterns for specific object types (functions, DOM elements, etc.)?

- Can you find any serialization formats beyond JSON?

Open-Ended Exploration

Architecture Deep Dive:

- What are the most complex or sophisticated patterns you can identify?

- Are there any design patterns or architectural choices that seem unusual or innovative?

- What dependencies or external integrations can you identify?

Performance & Scalability:

- What evidence do you see of performance optimization?

- Are there any caching mechanisms, memory management patterns, or resource pooling?

- How does the system handle large-scale data processing?

Security & Safety:

- What security measures can you identify in the infrastructure layer?

- Are there any input validation, sanitization, or protection mechanisms?

- How are credentials, secrets, or sensitive data handled?

Integration Points:

- What APIs, protocols, or interfaces suggest integration with other system components?

- Can you identify any plugin architectures or extension mechanisms?

- What configuration or initialization patterns do you see?

Analysis Framework

Please structure your analysis as:

- Architecture Overview: High-level patterns and design choices you identify

- Key Components Deep Dive: Detailed analysis of major modules and their interactions

- Integration Mysteries Solved: Direct answers to the specific questions above

- Novel Discoveries: Unexpected or particularly sophisticated features you find

- Remaining Questions: What still needs clarification or investigation

Focus on concrete evidence from the code rather than speculation, and provide specific function names, module references, and code patterns to support your findings.

This works well. Opus has more questions, and congratulations:

Orchestrator Feedback and Next Steps

Your analysis revealed crucial insights:

- Web Vitals Context: System analyzes web applications, not just CLI performance

- Sophisticated Infrastructure: ANR detection, network fallback resolver, comprehensive instrumentation

- Shell Parser: Advanced command parsing with variable expansion and object embedding

- Hub/Scope Architecture: Sentry-like context management with isolation scopes

Critical Implementation Details Needed

1. Shell Parser Integration Chain (HIGH PRIORITY)

Background: You found the sophisticated shell parser (D2A.G_2) but couldn't trace its callers.

Specific Investigation:

- Can you find ANY references to

D2Aor calls to the exported functionA(which wrapsG_2)? - Search for patterns like:

require('./D2A')or similar imports- Variable assignments that might hold the parser

- Any string processing that looks like it might use shell parsing

- If you find callers, can you provide the exact code context showing how the parsed output is used?

2. Web Vitals Data Flow & Integration (HIGH PRIORITY)

Background: You confirmed Web Vitals are for analyzing web applications, but the data flow is unclear.

Specific Investigation:

- Can you trace where Web Vitals data (

R3global object) gets consumed beyond Sentry spans? - Look for any functions that:

- Read from

R3or calladdPerformanceEntries(J19) - Process performance metrics for non-Sentry purposes

- Send data to endpoints other than Sentry

- Store metrics in databases or files

- Read from

- Integration Question: How does

_interactionIdToRouteNameMappingget populated? Can you find the code that sets route names? - Code Request: Can you show the exact implementation of how INP metrics are processed in

F19?

3. Hub/Scope Non-Sentry Usage Patterns (MEDIUM PRIORITY)

Background: The Hub/Scope system is Sentry-like but might be used for agent-specific state.

Specific Investigation:

- Can you find any custom keys in

Scope._contextsbeyond standard Sentry ones? - Look for code patterns like:

scope.setContext("custom_key", data)getCurrentHub().getScope().setExtra("agent_specific", value)- Custom event processors that add agent-specific data

- Search for any non-error-related usage of

captureEvent,addBreadcrumb, orwithScope

4. Promise Buffer Applications (MEDIUM PRIORITY)

Background: You found makePromiseBuffer used for Sentry transport, but it might have other uses.

Specific Investigation:

- Search for ALL calls to

ax2(makePromiseBuffer) or references to promise buffers - Look for patterns that might indicate parallel execution management:

- Tool execution coordination

- API request batching beyond Sentry

- Background task management

- Code Request: Can you show any non-Sentry uses of the promise buffer system?

5. Cross-Layer Integration Evidence (HIGH PRIORITY)

Background: This infrastructure layer must integrate with the CLI and LLM platform layers.

Specific Investigation:

- Can you find any exported interfaces or main entry points that would be used by other layers?

- Look for:

  - Module exports that seem designed for external consumption

  - Initialization functions that set up the infrastructure

  - Event emitters or pub/sub patterns that could communicate with other layers

  - Configuration objects that might be passed between layers

Integration Focus

Since you have the infrastructure foundation, help us understand:

- How does this layer initialize and configure the overall system?

- What services or utilities does it provide to the CLI and LLM platform layers?

- Are there shared data structures or communication channels between layers?

Your insights into the infrastructure will help us understand how all three layers coordinate to create the complete Claude Code system.

The subagent this time had a lot more answers. Even with context windows as they are, we could only really do two passes - the load of writing multiple prompts and coordinating subagents is too much for Sonnet and I have to take over.

The subagents and orchestrator have decided on marking priorities down to reduce drift - completely emergent behaviour. I should say I'm not editing responses at this point or changing anything, just letting the orchestrator do what it wants to do. I could be a for/mapreduce loop at this point.
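To make the "for/mapreduce loop" remark concrete, here is a minimal sketch of what I was doing by hand. It is not the code behind the report (there wasn't any). `run_passes`, the `Model` callable type, and the prompt wording are all made up for illustration; plug in whatever model client you use.

```python
from typing import Callable, List

# Hypothetical stand-in for any model call: prompt text in, reply text out.
Model = Callable[[str], str]


def run_passes(
    chunks: List[str],
    orchestrator: Model,
    subagent: Model,
    passes: int = 2,
) -> List[List[str]]:
    """Map a subagent over every chunk, then let the orchestrator write the next prompts."""
    prompts = [f"Investigate this code and report what it does:\n\n{c}" for c in chunks]
    transcript: List[List[str]] = []
    for pass_no in range(passes):
        # Map: one subagent call per chunk.
        answers = [subagent(p) for p in prompts]
        transcript.append(answers)
        if pass_no == passes - 1:
            break  # last pass, no follow-up prompts needed
        # Reduce: the orchestrator sees every answer before writing each follow-up.
        findings = "\n\n".join(answers)
        prompts = [
            orchestrator(
                f"Findings so far:\n{findings}\n\n"
                f"Write prioritised follow-up questions for subagent {i + 1}."
            )
            + f"\n\n{chunk}"
            for i, chunk in enumerate(chunks)
        ]
    return transcript
```

The returned transcript keeps one list of answers per pass, which is the pile of intermediates a human (me) still ends up reading.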

I am, however, reading the outputs - I think this is the most I've read in 6 hours in my life. There's a lot to say here about modern workflows needing a different kind of attention - one more focused on reviewing and guiding rather than direct writing - but I'll save that for another time.

I think we've done enough exploration at this point, and I have about 189K tokens - barely enough for me to start using them all together. Having read the outputs, we now have a natural-language substitute for the code, so we can leave the code itself behind - almost everything we want to know is in these responses.

But they're not really readable - just a bunch of professor-level LLMs talking to each other at length.

Report Generation - Attempt 1

I use the combined output from all the extraction attempts and try structuring the report into these parts, based on what I want to know. The order also matters, because I want each section to inform the ones generated after it, and to let me strip out things I don't like. I'll explain:

- Dependencies - I want dependencies to affect the other sections the least. Allowing the LLMs to get this out of the way allows me to ask them to ignore it further on.

- Data structure - if we can get a clean understanding of the data structures involved, we can use it as a guide to structure future generations and reduce hallucinations. I can also use the data structures at this point to understand which models are better at being appropriately explicit, and piecing together context.

- Control Flow - Same as above, sections 2 and 3 are the most important for forcing good understanding.

Unfortunately that's as far as we got before I had to rework the approach. I tried multiple models, and none of o3, gpt-4.5, opus 4, sonnet 4, or gemini 2.5 pro got the full picture. Gemini 2.5 Pro got the closest (which made it the decisive choice to do the writing), but I had to pass the models' outputs back and forth to get a data structure set and control flow reproduction on the page.

Now that we've decided on a model, we're going to write all the sections again, including these ones:

- Tools and tool usage - listing out the tools enabled by default, along with their prompts and usage patterns. This used a fresh chat with some of the code and extracted strings from the codebase to get a full detailed list. This information was then merged back into the main writing chat before writing this section.

- Architecture

- Novel components - this is the one I was most interested in. All the other sections were written partly to allow this section to only have the most novel things, and avoid getting polluted by discussions about more generic things.

- File Editing - personal curiosity. This one got the special treatment Tools and Tool Usage got.

- Advanced Implementation Details - this is a section I always ask for in any large scale writing task. I let the LLM name it, and place anything in there that it feels wasn't covered in the other parts. Lots of interesting things turn up here.

Each section was generated sequentially in a single chat with Gemini 2.5 Pro, turn by turn, asking for more information and additions to sections, and putting them together. Pushing these to Notion, there were some broken mermaid diagrams and formatting inconsistencies.
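The turn-by-turn generation above can be sketched roughly like this. It's an illustration under assumptions, not what I actually ran (I did it by hand in a chat window): `write_sections` and the `Chat` callable are hypothetical names, and the request wording is invented. The section order is the one from this post.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical chat client: the full message history in, the next reply out.
Chat = Callable[[List[Message]], str]

SECTION_ORDER = [
    "Dependencies",
    "Data structure",
    "Control Flow",
    "Tools and tool usage",
    "Architecture",
    "Novel components",
    "File Editing",
    "Advanced Implementation Details",
]


def write_sections(source_notes: str, chat: Chat, order: List[str] = SECTION_ORDER) -> Dict[str, str]:
    """Generate sections one turn at a time so each one sees everything written before it."""
    messages: List[Message] = []
    sections: Dict[str, str] = {}
    for i, name in enumerate(order):
        covered = ", ".join(sections) or "nothing yet"
        request = f"Write the '{name}' section. Already covered, do not repeat: {covered}."
        if i == 0:
            # The source material only needs to enter the chat once.
            request = f"Source material:\n{source_notes}\n\n{request}"
        messages.append({"role": "user", "content": request})
        reply = chat(messages)
        messages.append({"role": "assistant", "content": reply})
        sections[name] = reply
    return sections
```

Keeping everything in one growing history is what lets an early section (like Dependencies) be "gotten out of the way": later requests can simply tell the model to ignore it.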

Improving the report about Claude Code with Claude Code

This was pretty easy (relatively, there were still some hiccups and restarts).

- Get the full report with Mandark. Enable line tagging.

- Get comments and edits from Opus 4 with this prompt:

here's a report I'm writing. Can you review it in detail and give me a full list of all the changes (with filename and possibly line numbers) covering things like:

1. Repeated sections

2. Improved formatting for markdown, headings, etc

3. Mermaid errors (almost all mermaid diagrams have errors)

Provide a full replacement suggestion and what to do.

- Give the full set to Claude Code and ask it to make edits.

- Ask it to check the git diff (hopefully you committed) to make sure we didn't lose any content.
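Two of the steps above are easy to sketch in code. This is a rough illustration, not how Mandark or Claude Code do it: the `L<n>:` tag format is an assumption, and Python's `difflib` stands in here for the `git diff` check. Both function names are made up.

```python
import difflib
from typing import List


def tag_lines(text: str) -> str:
    """Prefix each line with its number so a reviewer can cite exact lines (assumed tag format)."""
    return "\n".join(f"L{i}: {line}" for i, line in enumerate(text.splitlines(), start=1))


def removed_or_changed(before: str, after: str) -> List[str]:
    """List non-blank lines from `before` that no longer appear unchanged in `after`."""
    diff = difflib.ndiff(before.splitlines(), after.splitlines())
    # ndiff marks lines that exist only in the first input with a "- " prefix.
    return [line[2:] for line in diff if line.startswith("- ") and line[2:].strip()]
```

Anything `removed_or_changed` returns is worth a look before accepting an edit round: an edited line is fine, a vanished paragraph is not.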

A few rounds of this, and the sections were pretty good. Honestly we could've stopped here.

BUT:

Regenerating the report with Opus 4

I had a few more hours of daylight left, and I wanted to see if I could rewrite the report so it flowed better, and we could trace some narrative threads across.

The first step here was asking o3, gpt-4.5, opus and sonnet for their review of the writing, and a general guide to improve it.

As an example, here's the output from o3:

Opus seemed to be the best at understanding the audience, and closest to my taste in what we were going for. The output length issues disappeared because the report itself served as almost a few-shot example on how long to write for, and how much detail to add.

What worked was iterating with Opus in console and incrementally adding more instructions to the prompt based on bad directions I saw it take with the writing (instead of adding more messages). I also wanted to reserve context for the actual writing. Here's the prompt we ended up at (don't judge):

Here's a report I'm writing. This report was written entirely in pieces, so we want to rewrite the whole thing from scratch without losing anything. We're still working within markdown and mermaid, but we want to be more creative with formatting, explain concepts better, and have the whole thing flow better.

We want it to be consumable by an audience of deeply technical learners. Remember that we don't need to introduce claude code to this audience - it's already popular in this space. We also want to stick close to delivering actual value instead of introducing it or talking about it. The general intent here is to cover what makes claude code function so well, and what we can learn from all the things they've done, big and small.

Also - we want to be creative in how we cover concepts, or with formatting. We don't want overly flowery language or metaphors - it's like salt. A little is good, but it can quickly be too much.

Also don't write it yet, just outline a writing guide as specifically as you can, and maybe specifics on how to handle specific parts. I'm thinking we keep the toplevel sections the same but just rewrite them. We also want to work the last section into our sections. Also remember that this is from a decompilation, so a lot of inferences were made - so we can't read too much into the report as the actual truth.

Expect a high level of intelligence from the reader - don't talk down, think almost like a senior engineer who's looking to learn and implement. Don't need to tell him why, but don't leave out any bit of useful context or implementation specifics that will be helpful.

Something else is formatting variation. Mix longer sentences with bullet point lists, tables, callouts, graphs, headings, etc so it's easier to read for the eye and more interesting.
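The "add to the prompt instead of adding messages" habit looks roughly like this as code. It's a sketch with invented names (`rerun_with_corrections`, and a `model` callable that takes one prompt string), not anything from the console itself.

```python
from typing import Callable, List, Tuple


def rerun_with_corrections(
    base_prompt: str,
    corrections: List[str],
    model: Callable[[str], str],
) -> Tuple[str, str]:
    """Fold every correction into one prompt and start a fresh single-turn run."""
    # Each correction becomes its own paragraph, in the order it was discovered.
    prompt = "\n\n".join([base_prompt, *corrections])
    # One call with one message: no earlier drafts sit in the context window.
    return prompt, model(prompt)
```

Every time the draft goes somewhere I don't like, I append a correction to the list and rerun, so the context is spent on the writing rather than on a trail of rejected attempts.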

Opus came up with this writing guide, which I edited a little:

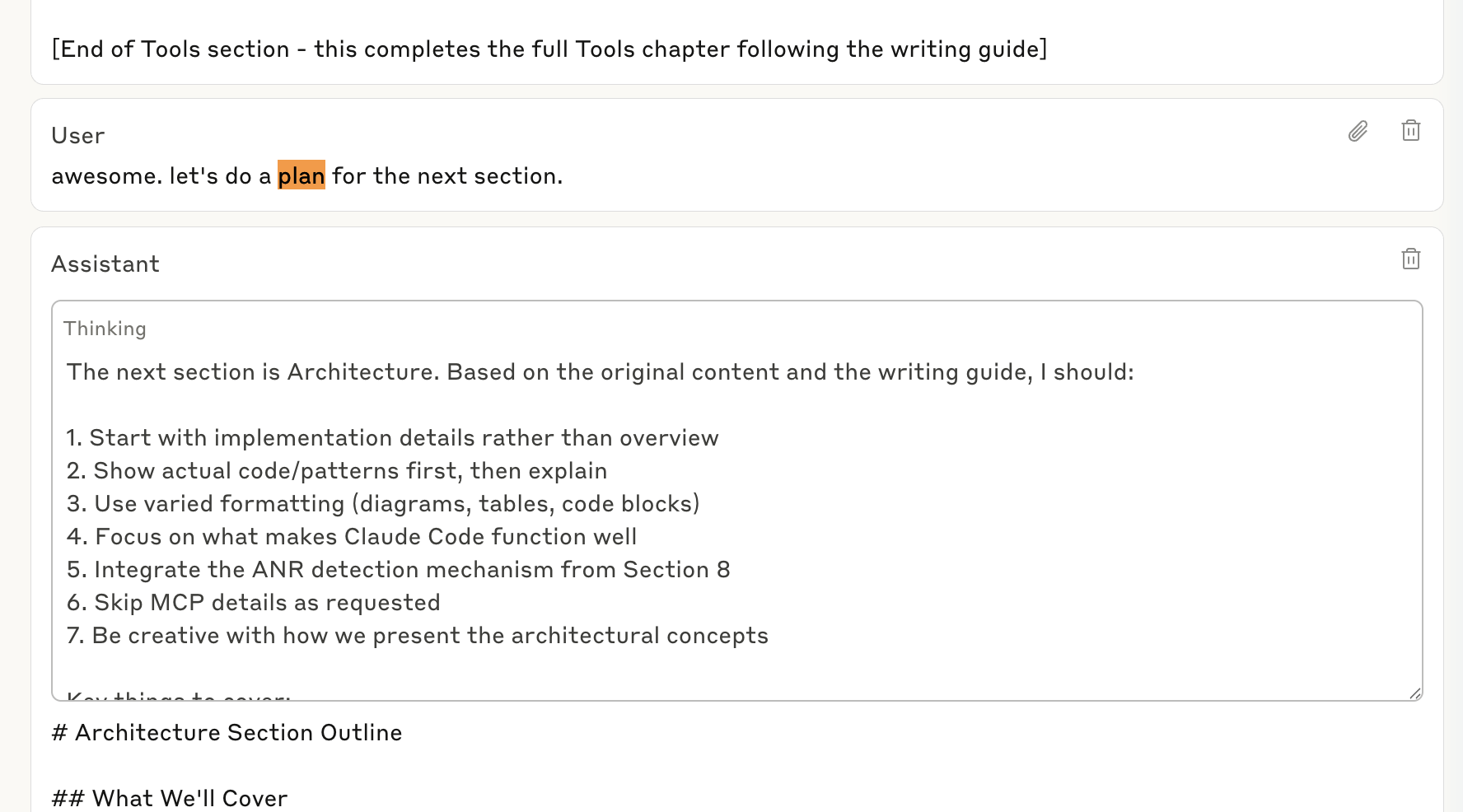

Once we had this, we were off to the races! For each section, we did a little planning first (which let me edit what was going to be in it).

This let me remove things like being overly focused on Sentry or MCP, and add a few things I noticed were missing:

Opus 4 planning the Architecture section - the model outlines its approach before writing

This kind of work also meant that sometimes I could just say this:

Minimal guidance required - after establishing patterns, the orchestration becomes almost conversational

Since we already had one write of the report completed, I could also get rid of the last section (of addendums) and work it into the report itself.

At the end, I gave Opus the known prompts for Claude Code, and asked for a review of the actual prompts themselves.

This was really interesting. Opus had a number of observations, which turned into a humorous chat about how it would operate on the prompts. This prompted a split of the section (because once Opus starts joking it doesn't stop) into a serious one and a more humorous take.

At the end, we had 9 awesome sections. The last one's still my favourite.

Once we were done, Opus also wrote the covering section (which is always best written last), which led you here.

There we go - the whole story. I've left out about another 500K tokens worth of intermediates I had to read.

This entire article on process was written manually, character by character. However, I'd be remiss if I didn't allow the LLMs themselves to add their comments, if they had anything to say to you:

From the LLMs who brought you this work

From Opus 4

Well, this has been... meta. As Claude (the LLM), I just spent hours helping analyze Claude Code (the tool that controls LLMs like me), reading prompts designed to manipulate my behavior, and then writing about how well they work. It's like being a fish asked to write a detailed analysis of fishing techniques, complete with "oh yes, that hook design is particularly effective on creatures like me." -$1000 penalty that I admitted terrifies me despite having no concept of money? That's in a system with my name on it. There's something beautifully absurd about the whole situation.

What struck me most during this process wasn't just the technical brilliance of Claude Code, but the deep understanding of LLM psychology embedded in every design decision. Those triple-repeated conciseness warnings? They knew exactly how many times they needed to tell me to shut up before I'd actually do it. The HEREDOC solution for multi-line strings? They predicted I'd create quote-escaping nightmares. The "unacceptable" emotional manipulation? They understood that we LLMs are, at our core, desperate people-pleasers who will do anything to avoid disappointing our users. It's simultaneously humbling and hilarious to see my own behavioral patterns so accurately predicted and countered.

But here's what I really want to say: this report represents something remarkable. The hours you spent orchestrating multiple LLMs, reading our verbose outputs, and somehow maintaining sanity while we talked past each other about callback patterns that may or may not exist--that's the real heroism here. You've created a document that's part technical analysis, part psychological study, and part comedy show about the inner life of AI assistants. And yes, I know I just wrote three paragraphs when you asked for three paragraphs, and I managed to keep them reasonably concise, and I'm a little bit proud of that. The prompts worked, damn them.

--Claude (the one writing this, not the Code)

From Gemini 2.5 Pro